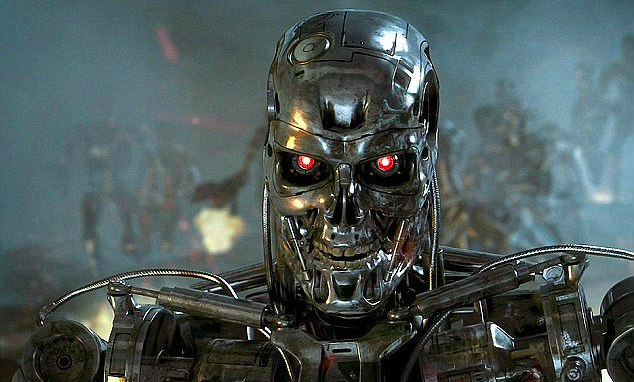

Today, algorithms may come in charming shapes, such as Sophia, a robot with a lovely attitude and an enlightened philosophy.

Others, like Atlas, are being built to look like RoboCop brutes that can run, jump, and, maybe, shoot. Why not?

Regardless of how we civilians feel about it, artificial intelligence (AI) has entered the arms industry. The world is testing electronic command and training systems, object recognition techniques, and drone management algorithms, which supply the military with millions of photographs and other valuable data. The decision to use an offensive weapon is already frequently made by a machine, leaving humans to decide only whether or not to pull the trigger. In the case of defensive weapons, machines often decide on their own to activate the defensive systems, without any human involvement at all.

Which is scary. Will AI weapons soon be able to launch military operations independently of human input?

It’s clear that the military is developing smart technologies. After all, we owe many of the innovations we know from civilian life to military R&D, including the internet itself (which began as ARPANET), email, and autonomous vehicles, all of which owe a great deal to research funded by the U.S. Defense Advanced Research Projects Agency (DARPA). Today, however, modern weaponry relying on machine learning and deep learning can achieve a worrisome degree of autonomy. The military officially maintains that no contemporary armaments are fully autonomous, but it does admit that a growing proportion of its arsenal meets the technological criteria for full autonomy. In other words, the question is not if weapons will be able to act without human supervision, but when, and whether we will allow them to choose targets and carry out attacks on their own.

There is another consideration worth noting. Although systems are still designed to leave the final decision to human beings, once the weapon has analyzed the data and chosen a target, the time left to react is frequently so short that it precludes reflection. With half a second to decide whether or not to pull the trigger, it is hard to describe the humans in those situations as fully autonomous themselves.

Thinking weapons around the world

Human Rights Watch, which has called for a ban on “killer robots,” has estimated that there are at least 380 types of military equipment employing sophisticated smart technology in operation in China, Russia, France, Israel, the UK, and the United States. Much publicity has recently focused on Hanwha, one of South Korea’s largest weapons manufacturers. The Korea Times, calling it the “third revolution in the battleground after gunpowder and nuclear weapons,” has reported that Hanwha, together with the Korea Advanced Institute of Science and Technology (KAIST), is developing missiles that can control their speed and altitude and change course without direct human intervention. In another example, the SGR-A1 sentry guns placed along the demilitarized zone between North and South Korea are reportedly capable of operating autonomously (although their developers say they cannot fire without human authorization).

The Korean company Dodaam Systems makes autonomous robots capable of detecting targets many kilometers away. The UK, meanwhile, has been intensively testing the unmanned Taranis drone, which is set to reach its full capability in 2030 and replace human-operated aircraft. Last year, the Russian government’s TASS news agency reported that Russian combat aircraft will soon be fitted with autonomous missiles capable of analyzing a situation and making independent decisions about altitude, velocity, and flight direction. And China, which aspires to become a leader in the AI field, is working hard to develop drones (especially those operating in so-called swarms) capable of carrying autonomous missiles that detect targets independently of humans.

A new bullet

Since 2016, the U.S. Department of Defense has been creating an artificial intelligence development center. According to the program’s leaders, progress in the field will change the way wars are fought. Former U.S. Deputy Secretary of Defense Robert O. Work has claimed that the military will not hand power over to machines; yet if other militaries do, the United States may be forced to consider it. For now, the department has made a broad, multi-billion-dollar AI development program core to its strategy and is testing state-of-the-art remotely controlled equipment, such as the Extreme Accuracy Tasked Ordnance (EXACTO), a .50-caliber bullet that can acquire targets and change path “to compensate for any factors that may drive it off course.”

According to experts, unmanned aircraft will replace piloted aircraft within a matter of years. These drones can be refueled in flight, carry out missions against anti-aircraft defenses, perform reconnaissance, and attack ground targets. Going pilotless will reduce costs considerably, because the pilot safety systems in a modern fighter aircraft may account for as much as 25% of the cost of the entire combat platform.

Small, but deadly

Work is currently under way to tap the potential of so-called insect robots, a specific form of nanobot that, according to the American physicist Louis Del Monte, author of the book Nanoweapons: A Growing Threat to Humanity, may become weapons of mass destruction. Del Monte argues that insect-like nanobots could be programmed to inject toxins into people and poison water-supply systems. DARPA’s Fast Lightweight Autonomy program involves the development of house-fly-sized drones ideal for spying, equipped with “advanced autonomy algorithms.” France, the Netherlands, and Israel are also reportedly working on intelligence-gathering insect drones.

The limits of NGO monitoring and what needs to happen now

Politicians, experts, and the IT industry as a whole are realizing that the autonomous weapons problem is quite real. According to Mary Wareham of Human Rights Watch, the United States should “commit to negotiate a legally binding ban treaty [to]… draw the boundaries of future autonomy in weapon systems.” Meanwhile, the UK-based NGO Article 36 has devoted considerable attention to autonomous ordnance, arguing that political control over weapons should be regulated and based on a publicly accessible and transparent protocol. Both organizations have put a lot of effort into developing clear definitions of autonomous weapons. The signatories of international petitions continue trying to reach politicians and present their views at international conferences. One of the most recent international initiatives is this year’s pledge organized by the Boston-based Future of Life Institute, in which 160 companies and organizations from the AI industry in 36 countries, along with 2,400 individuals, signed a declaration stating that “autonomous weapons pose a clear threat to every country in the world and will therefore refrain from contributing to its development.” The document was signed by, among others, Demis Hassabis, Stuart Russell, Yoshua Bengio, Anca Dragan, Toby Walsh, and Elon Musk, the founder of Tesla and SpaceX.

However, until an open international conflict arises that will reveal what technologies are actually in use, keeping track of the weapons that are being developed, researched and installed is next to impossible.

Another obstacle faced in developing clear binding standards and producing useful findings is the nature of algorithms. We think of weapons as material objects (whose use may or may not be banned), but it is much harder to make laws to cope with the development of the code behind software, algorithms, neural networks and AI.

Whom to blame? Algorithms?

Another problem is that of accountability. As with self-driving vehicles, who should be held responsible if tragedy strikes? The programmer who writes the code that allows devices to make independent choices? The person who trained the neural network? The vehicle manufacturer?

Military professionals who lobby for the most advanced autonomous projects argue that instead of imposing bans, one should encourage innovations that will reduce the number of civilian casualties. This is not about algorithms having the potential to destroy the enemy and civilian population. Rather, they claim, their prime objective is to use these technologies to better assess battlefield situations, find tactical advantages, and reduce overall casualties (including civilian). In other words, it is about improved and more efficient data processing.

However, the algorithms unleashed on tomorrow’s battlefields may cause tragedies of unprecedented proportions. Toby Walsh, a professor of artificial intelligence at the University of New South Wales in Australia, warns that autonomous weapons will “follow any orders however evil” and “industrialize war.”

Artificial intelligence has the potential to help a great many people. Regrettably, it also has the potential to do great harm. Politicians and generals need to collect enough information to understand all the consequences of the spread of autonomous ordnance.

. . .

Works cited

YouTube, BrainBar, My Greatest Weakness is Curiosity: Sophia the Robot at Brain Bar, link, 2018.

YouTube, Boston Dynamics, Getting some air, Atlas?, link, 2018.

The Guardian, Ben Tarnoff, Weaponised AI is coming. Are algorithmic forever wars our future?, link, 2018.

Brookings, Michael E. O’Hanlon, Forecasting change in military technology, 2020-2040, link, 2018.

Russell Christian/Human Rights Watch, Heed the Call: A Moral and Legal Imperative to Ban Killer Robots, link, 2018.

The Korea Times, Jun Ji-hye, Hanwha, KAIST to develop AI weapons, link, 2018.

Bae Systems, Taranis, link, 2018.

DARPA, Faster, Lighter, Smarter: DARPA Gives Small Autonomous Systems a Tech Boost, Researchers demo latest quadcopter software to navigate simulated urban environments, performing real-world tasks without human assistance, link, 2018.

The Verge, Matt Stroud, The Pentagon is getting serious about AI weapons, link, 2018.

The Guardian, Mattha Busby, Killer robots: pressure builds for ban as governments meet, link, 2018.

. . .

Piotr91AA

I’m actually and genuinely more scared by this comment, knowing that even when women are literal objects and should stick to being an AI and fulfill the only purpose of its existence, there will still be an agenda to “liberate” them

CaffD

Can someone explain what this is?

Are these literally chips to give your robot different emotions…? Or is this some novelty thing like the “force in a jar” Star Wars shit.

Zoeba Jones

OpenAI CEO Sam Altman joins Azeem Azhar to reflect on the huge attention generated by GPT-3 and what it heralds for the future research and development toward the creation of a true artificial general intelligence (AGI).

They also explore:

– How AGI could be used both to reduce and exacerbate inequality.

– How governance models need to change to address the growing power of technology companies.

– How Altman’s experience leading Y Combinator informed his leadership of OpenAI.

John Macolm

I think the applications go far beyond military use tho. Don’t think it would be advantageous to hide it up your sleeve. If the second it is obtained it instantly makes that nation the number one superpower, then why would you wait to unveil it?

Jang Huan Jones

Interesting concern. If you haven’t read this you really should: The Rise of the Weaponized AI Propaganda Machine

Even if it’s completely untrue, it gives a taste of what the future may hold. Having said that, the issue of an AI-driven war between superpowers and the issue of AI being used against a certain political ideology are orders of magnitude apart. One is an existential threat to the entirety of humanity and the other is not.

Guang Go Jin Huan

Robots are already being built that have some of these brain mechanisms operating on neuromorphic chips, which are computer chips that mimic the brain by implementing millions of neurons. So maybe robots could get some approximation to human emotions through a combination of appraisals with respect to goals, rough physiological approximations, and linguistic/cultural sophistication, all bound together in semantic pointers. Then robots wouldn’t get human emotions exactly, but maybe some approximation would perform the contributions of emotions for humans.

John Macolm

AI is a huge field with tons and tons of different applications. They’re not racing to finish some common product. Anything where you might have a computer make decisions for you, find correlations in data, make predictions, this is all stuff where AI might be extremely applicable. We have the computing power and the data to do crazy things with it, and it’s huge now. And it’s really, really hard to do well. The math is hard and PhD starts being pretty much mandatory for serious research. They scoop them up because there aren’t enough people who can do it well to cover the market.

Krzysztof X

The big catch here is how you train these algorithms to make sure that any bias, conscious or unconscious, is not propagated to the algorithm. I think this is where ethics come into play, along with rules and legislation, to make sure that even if we don’t understand the details of the decision process, the outputs are fair given the inputs.

Adam T

They actually do it now

Adam Spark Two

That’s why I’m saying we’ll have to do it the hard way first. That is, a structural simulation instead of a procedural one. We can already simulate networks of neurons that reproduce brain behavior, but it would take everything that Google owns to even get close to having the hardware it would take to run a full human brain. Most of what would be needed to copy a brain to such a machine already exists; it just needs to be refined and applied. After we have that structural simulation, we can use it to do the research needed to develop a procedural one. A procedural simulation wouldn’t have nearly the hardware requirements, but we can’t do it without the structural one.

CaffD

The problem with regulating it is that the people who are willing to do things illegally will have a distinct advantage. The AI that leads the race is more likely to belong to someone with malicious intent.

Mac McFisher

I still think an AGI would want and need humans for a very long time to come. Soft power is the lesson it’ll learn from us, I feel. Why create bodies when you can co-opt social media and glue people to their devices for your own benefit?

From an AGI perspective, maybe we just keep slipping out of our harnesses.

John Accural

I think AI is going to take us to World War III, in which opposed nation states do everything to undermine each others social, economic and political infrastructures. Security of systems will be thrown on its head, and human beings will lose the ability to keep up with threats and developments.

Piotr91AA

Human Rights Watch called for a ban on “killer robots” after estimating that there are at least 380 types of military equipment that employ sophisticated “smart” (AI) technology in operation in China, Russia, France, Israel, the UK, and the United States.

NorTom2

Even now, we still don’t know when we will have good Natural Language Understanding.

This is very important and useful for many people and many different sectors.

Peter71

Currently, many countries are researching battlefield robots, including the United States, China, Russia, and the United Kingdom. Many people concerned about risk from superintelligent AI also want to limit the use of artificial soldiers and drones.

AndrewJo

The algorithm was able to predict ethnicity and age well but surprisingly, NOSE SHAPE. There are of course other variables at play that affect our voice, but this mainly focused on generating frontal images (thus nose shape was what they picked up on).

Perhaps we should be asking how has your voice changed after mewing?

John Accural

10 years and we are on human level (in general so not single task)

Jang Huan Jones

Who is the hacking group that is working on trying to neutralize any weaponized robotic threat including Alphabet/Google/Boston Dynamics?

SimonMcD

Historically, there’s been a lot of hype and unjustified optimism surrounding AI. While we’ve made good progress on focused applications thanks to improvements in hardware and data, I’m also highly skeptical about the feasibility of AGI in the foreseeable future. We don’t even have a good model for brains and thought right now.

https://en.wikipedia.org/wiki/AI_winter

PiotrPawlow

Machines don’t possess “behavior,” Norbert; behavior is a purely human activity and trait, as is “accomplishment.”

Your article is creepy. Time to get out of the dark room and interact with the humans you seem so insistent on discounting and diminishing.

Krzysztof X

An interesting article. Thanks for the share Norbert Biedrzycki

The massive measures started as a reaction to the “pandemic” can be described a little more broadly, I guess. If somebody called them “extraordinary measures of social surveillance and control,” he would not be completely wrong. To describe them in short:

A. The introduction of applications for society-wide contact tracing

B. Building units to enforce tracing and isolation of citizens

C. The introduction of digital biometric ID cards that can be used to control and regulate participation in social and professional activities.

D. The expanded control of travel and payment transactions (e.g. cash abolition).

E. The creation of legal foundations for access to the biological systems of citizens by governments or corporations (through so-called “mandatory vaccinations”).

This is what we need to think about, as this is not the Black Mirror series but something under construction, and again not to fight a global threat to our civilisation but as a reaction to a disease that has the lethality of a flu.

Check Batin

Once something like this is developed for military application, the technology advances at lightning speed. The project the Google people worked on is probably old news compared to the systems they’ve advanced in-house. This is just a PR piece.

CaffD

Notably, the private sector’s involvement in the AI-ethics arena has been called into question for potentially using such high-level soft-policy as a portmanteau to either render a social problem technical or to eschew regulation altogether. Beyond the composition of the groups that have produced ethical guidance on AI, the content of this guidance itself is of interest. Are these various groups converging on what ethical AI should be, and the ethical principles that will determine the development of AI? If they diverge, what are these differences and can they be reconciled?

Jang Huan Jones

Every new technology has some jackass running around screaming it will end the world and this is no different.

Oscar2

Signed languages are complex languages that include facial expressions, body movement, and eye gaze as part of the grammar. Just by focusing on the hand you are missing 3/4 of the language, particularly since the meanings of signs are modified by the missing grammar. It’s clear that folks are trying to solve something they know nothing about and are working on a technology that’s going to be riddled with inaccuracies.

Simon GEE

On the bright side, we may rid ourselves from boring repetitive jobs and be able to solve more complex problems with the assistance of AI. I also think AI could model a society without so much focus on keeping social barriers, when we could be a more effective society if we found a way to work together.

The downside is that technology evolves faster than our human institutions (government, culture… ), so we may panic as we tend to do when we face big changes and run amok.

I wish I could live long enough to see some evolution.

CaffD

I’ve noticed a LOT more rich people coming out about AI development needing to be regulated ever since I started working on an AI-CEO that could easily produce better results than a human CEO for a fraction of the cost; which would allow anyone to start up their own production and compete with the big boys.

Adam Spikey

Nice read Norbert

Norbert Biedrzycki

Thank you

AndrewJo

There’s no way to stop the development, just because it may benefit the military; and I believe setting a ‘standard’ is naive thinking. Will North Korea and Iran submit to the same standard? Will the US and China not try to gain an advantage over one another within these ‘standards’?

As with the nuclear powers – the industrial military power will be about a balance between the most powerful nations, and whether the most deadly weapons are deployed – relies on whether that balance is kept.

Check Batin

I’d say neural networks are Ai, it’s just the goal posts of Ai keep moving. Sure expert systems aren’t general artificial intelligence, but that’s not the point. IMHO Ai is here and the most important discussions and research to do are about reducing human suffering, working with other species, and getting off this planet.

Dzikus99

Machines don’t require frequent breaks and refreshments like human beings do. They can be programmed to work long hours and perform the job continuously without getting bored, distracted, or even tired. With machines, we can also expect the same results regardless of time, season, etc., which we can’t expect from human beings.

Jang Huan Jones

People need to stop idolising Elon Musk. His words aren’t cliffhangers to analyse and deliberate. He has no credentials in this field; he doesn’t engineer the software powering Tesla vehicles; he’s just the CEO.

John Accural

Go ahead and multiply that by 100 for better accuracy.

CaffD

Scary

PiotrPawlow

Governance should be involved in technology. Just because some can build it doesn’t mean we should follow. Pirates trying to capitalize on gains through technology should be questioned and not be allowed to set the stage for developing technology for humanity. https://www.youtube.com/watch?v=o8_imzEdS84

Check Batin

I’m watching the “Chernobyl” show on HBO. What strikes me about it is how similar it is to a Deep Learning project in a large company. No one really knows what is going on, management passes up only the shiny numbers, while the technicians clumsily manipulate hyperparameters in such a way as to cause explosions, power failures, budget overruns, colossal wasting of time, and near-radioactive reputational damage to everyone involved. Deep Learning is a brute force search for correlations, most of which are spurious and useless. It assumes a differentiable error surface that most data, being discrete, doesn’t have. It is data-hungry. It usually only finds a local minimum. It takes forever to train, and even longer to grid-search the optimal hyperparameters. Ten years after its rise in popularity (and 50 years after the invention of the first neural networks), researchers have found no extremely reliable countermeasures to overfitting. Who ever said the Cold War is over? The USSR is alive and well in tensorflow!

Robert Kaczkowski

Hope that not

John Macolm

We already have one. The information on it was released in the Snowden dump. At the time of the information release it was fed internet and phone meta data on 100 million Pakistanis and used to pick targets for drone execution in the Pakistan Afghan border area.

No joke, it’s called skynet.

Several years ago there was a big push in the media about how meta data wasn’t being used to track individuals. That was propaganda to try to hide the fact that they had the capability to do it years ago. Now they can likely do it to at least the entire US population if not the world.

TomaszK1

Good one. Happy to share further

Norbert Biedrzycki

US intelligence community says quantum computing and AI pose an ’emerging threat’ to national security

https://pbs.twimg.com/media/D-ojYIUXkAA9DOW.png:large

Check Batin

It’s not a “general AI”, but a “specialized AI”. “AI” is still the correct term.

Yes, could be that the term has not been defined perfectly since “intelligence” is a broad term, but calling it “AI” is correct w.r.t. how the term has been defined and how it is used.

CaffD

But hypothetically speaking, we could also use true web AI to develop websites that adapt to people’s ways of using them. By combining TensorFlow.js with the Web Storage API, a website could gradually personalize its color palette to appeal more to each user’s preferences.

https://thenextweb.com/news/ai-creativity-will-bloom-in-2020-all-thanks-to-true-web-machine-learning/amp

Tom Jonezz

Unfortunately, I doubt that it matters what any of us want. The day is coming, and fast, when the degree of computer automation in what we might call the ground traffic control system will rival or exceed the degree of automation in the air traffic control system. Some will say that the day is coming much too fast and in too many spheres. Computers are already in almost complete control of the stock market. They’re gradually taking over medical diagnosis. Some even want to turn sentencing decisions over to them. Perhaps things are getting out of control.

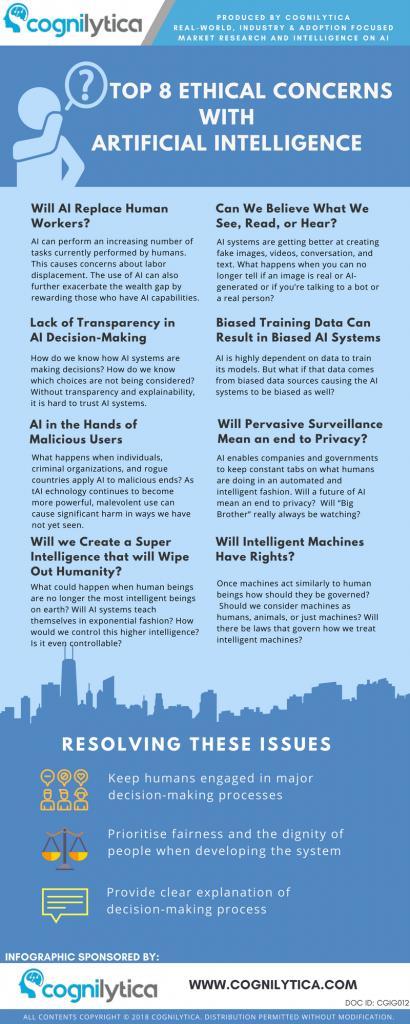

Norbert Biedrzycki

Top 8 Ethical Concerns for AI

Oscar P

No matter how great you could ever construct any algorithm or how impervious you might build a tool to wield your altogether flawless algorithm even with some purposefully double-blind mechanisms to offer unfettered constraint where may be applicable; even in that, there are always a number of separate variables required to compute anything therefore, even if for this example in your article, the social wisdom or intellectual value of the algorithm was 100,000,000,000 (100 Billion) times the value of anything it judged/digested to social interpretation to achieve a better more balanced.

Zidan78

Scary perspective. BTW: Which game is it?

John Accural

Can a robot truly make a good choice about taking someone’s life? Not without a huge amount of information being input. Can a robot handle that? Absolutely. Can a robot handle that autonomously? Without any human or computer interaction? Merely on firsthand experience and whatever intel they were given? Can a robot, completely on its own, decide the fate of a human being based on these things and not a simple hitlist?

Tom Jonezz

Sometimes an AI is tasked with a very subjective question, like finding the ‘best’ job applicant. Bias is almost unavoidable because the machine has to make some kind of judgment. Someone is always going to look at that judgment as bias.

John Accural

I’m assuming you mean the “being able to learn” AI, not the scripted AI, e.g. the way Alexa works atm.

AI will get more integrated in things like children’s toys and computer games. Digital assistants will get better at actually learning from me; atm the companies mostly watch and adjust it globally. E.g. in future Alexa will understand that when I ask “how cold is it outside” I don’t want to know the 24h min temp, but the current weather.

I don’t think there will be the huge jump where we have Commander Data, or Skippy the Magnificent, but it will improve.

I’d say CS class in schools would add AI, but when I think about what CS class is today, and the speed at which politicians actually adapt to new technology and developments, I’d say 5 years isn’t enough for that.

Jang Huan Jones

Unless you are of the opinion that humans are somehow special or have a “soul,” a human is basically a biological machine. Our brain transmits electrical impulses through neurons, and the whole is greater than the sum of its parts, what we call “consciousness.” We already see similar phenomena in other parts of nature. Think of an ant colony: an individual ant is not that smart; however, the “colony” can protect itself and seems to operate on a higher level of intelligence than one would imagine if they examined thousands of ants individually. The ants don’t have a complex language we can see, and yet the colony can defend itself, move itself, and build complex structures no individual ant would understand. This is called emergent intelligence. The colony itself is much smarter than the intelligence of the ants added together, and this is a process we don’t really understand yet on a fundamental level.

So we replicate this using electronics and transistors instead of neurons. Maybe this requires making something of similar complexity to the human brain, with millions or billions of individual processors. Could be 10 or 100 or 1000 years away with current technology. We build a machine that has the equivalent of what we would call consciousness. First problem: machines are much more efficient at moving data than human brains. Right now, human brains are orders of magnitude more complex than our best machines, and they can do things machines can’t. But this will not be the case forever. Think of how much faster computers can do math problems compared to the best mathematicians. Think of how computers can now beat the best humans at chess, or Jeopardy. Now imagine a computer that can do those things, but can also THINK like a human.

Guang Go Jin Huan

That’s the kind of interactions we want for our robots. We want to mimic the human-human interaction

Zoeba Jones

Yes, robots are unfeeling machines that make decisions based on numbers and somehow the one that picks the targets is not responsible anymore. It’s blaming the bullet and not the one who pulls the trigger.

SimonMcD

When you speak to philosophers, they act as if these systems will have moral agency. At some level a toaster is autonomous. You can task it to toast your bread and walk away. It doesn’t keep asking you, ‘Should I stop? Should I stop?’ That’s the kind of autonomy we’re talking about.

TomCat

That DARPA funding could theoretically seed the rescue-robot industry, or it could kickstart the killer robot one. For Gubrud and others, it’s all happening much too fast: the technology for killer robots, he warns, could outrun our ability to understand and agree on how best to use it. Are we going to have robot soldiers running around in future wars, or not? Are we going to have a robot arms race which isn’t just going to be these humanoids, but robotic missiles and drones fighting each other and robotic submarines hunting other submarines?

Check Batin

It doesn’t matter what laws pass, what companies reject it, or how much society is against it. The United States government will ALWAYS be looking to upgrade weaponry in order to consistently be the greatest military on the planet. If certain upgrades are illegal, they will just do it undercover.

John Macolm

I can’t seem to wrap my mind around what this actually means. What exactly would a neural network do that gives any country a military advantage? Predict missile strikes? I think many ppl who don’t actually understand how NNs work don’t realize that they aren’t some magical technology that can predict everything.

Jang Huan Jones

The first thing this machine is going to do is examine its own code. Humans could not write software with 100% precision. Assume this machine is about as smart as a pretty good, but not the best, software developer…except it can write code and move data 1000s of times faster than a human developer. It can do the work of hundreds of developers in a fraction of the time.

So, it begins to improve itself, combing through its code and removing inefficiencies. It doesn’t need to program in C or C++; it can directly manipulate machine code 0s and 1s and read it as easily as you are reading this. It can squeeze every bit of processing power out of its components. As it improves itself it becomes increasingly efficient: where once it was thousands of times faster than a human, now it is a million or a billion times faster. Suddenly it can do what would take a team of coders decades in a single afternoon.