The progress seen in IT over the last few years is truly mind-boggling. And yet the computational power of classical computers appears to be limited. Therefore everyone whose sights are set on boundless technological advances turns their attention to a technology promising to deliver another big breakthrough – quantum computing.

Although they have been talked about since the 1980s, it has only become clear in the last few years that quantum computers are definitely on the rise. The hype around them has been growing ever stronger. Experts have gone as far as to predict that we are about to witness a computational speedup of unprecedented proportions.

How can that be? Today’s processors comprise billions of transistors the size of a few nanometers packed into a very small space. According to Moore’s law, the number of transistors fit into a microprocessor doubles roughly every two years. Unfortunately, increases in the processing power of processors have been nearing a plateau. We are approaching the technological limits of how many transistors can be “jam-packed” into such a small space. The borderline that cannot physically be crossed is a transistor the size of a single atom with a single electron used to toggle between the states of 0 and 1.

This year may well bring a breakthrough in the development of quantum technology. What makes the latest prediction significantly more likely to come true is the recent massive involvement of Microsoft and Google in research in the field.

Atos, a company I am pleased to represent, has also invested considerable amounts of energy over the last few months into bringing us closer to constructing a superfast quantum computer.

The source of the big advantages of quantum computing

The simplest way to explain this is to compare quantum and classical computers. The familiar conventional device we know from our daily work relies on the basic information units called bits for all of its operations. These, however, can only represent either of the two states of 0 or 1.

Quantum computing instead uses intermediate states that liberate us from the bounds of the two opposing values. The qubit (or quantum bit), which is what the information unit used by quantum devices is called, has the capacity to assume the values of 0 and 1 simultaneously. In fact, it can assume an infinite number of states between 0 and 1, achieving what is referred to as superposition. Only when the value of a qubit is observed does it assume either of the two basic states of 0 or 1.

This may seem like a minor difference, but a qubit remaining in a superposition can perform multiple tasks at the same time. We are helped here by two fundamental principles of quantum physics: superposition and entanglement. Physically, a qubit can be represented by any quantum system with two distinct basis states, such as the spin of an electron or atom, two energy levels in an atom, or two polarizations of a photon: vertical and horizontal.

In a classical computer, one bit holds one of two values, two bits hold one of four values, and so forth. Two qubits, by contrast, can hold not one but all four values at any given time, while 16 qubits may hold as many as 65,536 values simultaneously. With every qubit added, the number of possibilities therefore doubles. The direct consequence of this is that, unlike a conventional machine, a quantum computer can perform multiple operations simultaneously.
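The doubling described above can be checked with a few lines of Python. This is only an illustrative sketch, not a simulation of real hardware: the function names `classical_states` and `uniform_superposition` are invented for this example, and the "equal superposition" is simply one convenient state to look at.

```python
from itertools import product


def classical_states(n_bits):
    """List every pattern an n-bit register could hold (it holds only one at a time)."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


def uniform_superposition(n_qubits):
    """Return the amplitudes of an equal superposition over all 2**n basis states."""
    dim = 2 ** n_qubits
    amplitude = 1 / dim ** 0.5  # chosen so the squared amplitudes sum to 1
    return [amplitude] * dim


if __name__ == "__main__":
    print(classical_states(2))  # the four patterns a 2-bit register chooses between
    for n in (1, 2, 8, 16):
        state = uniform_superposition(n)
        print(n, "qubits ->", len(state), "amplitudes tracked at once")
```

Each added qubit doubles the length of the list, which is why describing even a few dozen qubits on a classical machine quickly becomes expensive.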

Put more precisely: the above principle allows a quantum machine to process enormous amounts of data within an incredibly short time. Imagine a volume of data so big it would take millions of years to process by means of a classical computer.

However, this completely unthinkable task becomes quite workable with the use of a quantum machine. The device can process data hundreds of thousands, and ultimately millions, of times faster than machines made up of sophisticated silicon components! An ideal application for such a computer would be to sift through and recognize objects in enormous collections of photographs. Such machines would also be perfect for processing very large numbers, encryption and code breaking. According to mathematical estimates, the difference in capacity between quantum and conventional computers can theoretically amount to an astounding ratio of 1:18 000 000 000 000 000 000!

Deep freeze and full isolation

Most of the credit for practical applications in the field goes to the Canadian tech company D-Wave. Its clients range from government agencies such as NASA to for-profit corporations such as Google. It is D-Wave that engineered the world’s first machine referred to as a quantum computer, also named D-Wave.

A far more advanced machine is the D-Wave 2X™ System. Its processor generates no heat and operates at a temperature of 0.015 kelvin (approximately -273°C), which is 180 times colder than interstellar space! The processor’s environment is a vacuum with a pressure 10 billion times lower than atmospheric pressure!

Qubits must be fully isolated from the environment as they are highly sensitive and easily disturbed by, for example, changes in ambient temperature, external radiation, light or collisions with air molecules. That is why a vacuum, super low temperatures and a fully shielded environment are necessary.

Although news about a device that deserves to be called a quantum computer has been released and denied many times already, the D-Wave 2X™ System brings us a step closer to producing a machine for which nothing is impossible. The D-Wave was immediately put to work on machine learning, artificial intelligence and solving well-known biology problems such as protein folding. However, note that over the last few years, a number of skeptics and experts have been passionately challenging the claims that are electrifying journalists and business people.

Perhaps it is science fiction

To shed light on just how interesting and controversial quantum computing really is, allow me to provide examples of some of the extreme reactions from the IT community. A Google employee recently claimed that the D-Wave quantum computer solved, within 1 second, a problem that a standard machine would supposedly need 10 000 years to complete! On the other hand, many opinions resemble that of the physicist Matthias Troyer. When, in a special test three years ago, the D-Wave Two was said to have solved a problem assigned to it 3600 times faster than a classical computer, Troyer questioned the result, pointing out the lack of hard evidence to prove such efficiency. The confusion that arose in the field can best be illustrated by a quote from David Wineland of the National Institute of Standards and Technology in the USA, who said: “I’m optimistic [we’ll succeed] in the long term, but what ‘long term’ means I don’t know”.

Perhaps we should give credence to the opinions of IBM experts who believe it will take at least a dozen years for such wonders of technology to come into practical use. Their view is that while such devices will inevitably be designed for research institutes and laboratories, there is little hope of them ever coming to average users. All things considered, it is difficult today to make predictions with any degree of certainty.

What is in it for us?

A quantum computer requires a control system (an equivalent of an operating system), algorithms that allow one to make quantum calculations and proper calculation software.

The development of quantum algorithms is very difficult as they need to rely on the principles of quantum mechanics. The algorithms followed by quantum computers rely on the rules of probability. What this means is that by running the same algorithm on a quantum computer twice, one may get completely different results as the process itself is randomized. To put it simply, to produce reliable calculation results one has to factor in the laws of probability.
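To make the point about probability concrete, here is a minimal classical sketch of what "running the same algorithm twice may give different results" means in practice. Everything in it is an assumption made for illustration: `run_once` is a hypothetical stand-in for a quantum routine, the 70% success rate and the bit strings are invented, and no real quantum hardware or library is involved. The idea is only that repeating the run many times and taking the most frequent answer turns a randomized process into a reliable result.

```python
import random
from collections import Counter


def run_once(p_correct, correct_answer, wrong_answers, rng):
    """Simulate one run of a hypothetical probabilistic algorithm."""
    if rng.random() < p_correct:
        return correct_answer
    return rng.choice(wrong_answers)


def run_many(shots, p_correct=0.7, seed=0):
    """Repeat the run `shots` times and return the most frequent answer with all counts."""
    rng = random.Random(seed)  # fixed seed so the demonstration is repeatable
    counts = Counter(
        run_once(p_correct, "101", ["000", "011", "110"], rng)
        for _ in range(shots)
    )
    most_common_answer = counts.most_common(1)[0][0]
    return most_common_answer, counts


if __name__ == "__main__":
    answer, counts = run_many(1000)
    print("individual runs disagree:", dict(counts))
    print("majority answer:", answer)
```

A single run can return a wrong value, but over many repetitions the correct answer dominates the tally, which is what "factoring in the laws of probability" amounts to here.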

This sounds like a highly complex process. Unfortunately, it is. Quantum computers are suited to very specialized and specific calculations, and they need dedicated algorithms to harness all of their power. In other words, quantum computers will not appear on every desk or in every home. Using them there makes no sense.

Regardless of how much time is needed to generate a given result from the work of an algorithm, we can imagine, even today, a situation in which a quantum machine is required to solve a specific problem. Assume, for instance, that we want to process massive amounts of medical data to find a cure for cancer. A quantum computer using adequate algorithms could bring us closer to developing an effective formula. Neither the human brain nor any classical computer could possibly interpret all such data without errors or without overlooking significant information.

Quantum technologies have the potential to powerfully influence astronomy, mathematics, physics and other fields. Quantum computers can sift through huge amounts of data extremely quickly. This, in fact, may be the main reason why special agencies and Google invest so much in the technology. Quantum computers may make ideal code breaking tools. They can make quick work of cracking systems relying on the RSA method, which protects Internet browser connections and the connections used for mobile and online banking. Quantum computing is said to be the first technology to threaten the cryptographic algorithms of the blockchain and cryptocurrencies such as bitcoin.
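Why fast factoring would break RSA can be shown with a toy example. The sketch below uses deliberately tiny, textbook-sized numbers (p = 61, q = 53) and plain trial division in place of a quantum algorithm; real keys are hundreds of digits long, and the helper names are invented for this illustration. It requires Python 3.8 or later for the modular inverse via `pow(e, -1, phi)`.

```python
def factor_by_trial_division(n):
    """Find two factors of n by brute force (feasible only for tiny n)."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no non-trivial factors")


def recover_private_exponent(n, e):
    """Rebuild the RSA private exponent from the public key once n is factored."""
    p, q = factor_by_trial_division(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse of e modulo phi


if __name__ == "__main__":
    n, e = 61 * 53, 17            # toy public key (assumed values)
    message = 65
    ciphertext = pow(message, e, n)

    d = recover_private_exponent(n, e)   # the attacker never saw p, q or d
    print("recovered message:", pow(ciphertext, d, n))
```

The only hard step for an attacker is the factoring inside `factor_by_trial_division`. That is the step a sufficiently large quantum computer is expected to speed up dramatically, which is why RSA is considered at risk.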

Evidently, the technology is not all advantages. There is definitely a dark side to it as well. Will we be able to continue to rely on encryption as we know it today? Will we be able to protect our critical and previously unbreakable codes from being cracked? What will the world be like if any bit of information is available within a blink of an eye? Instantaneously, indeed, but not for all as access will be limited to those who possess quantum computers and quantum computing technology.

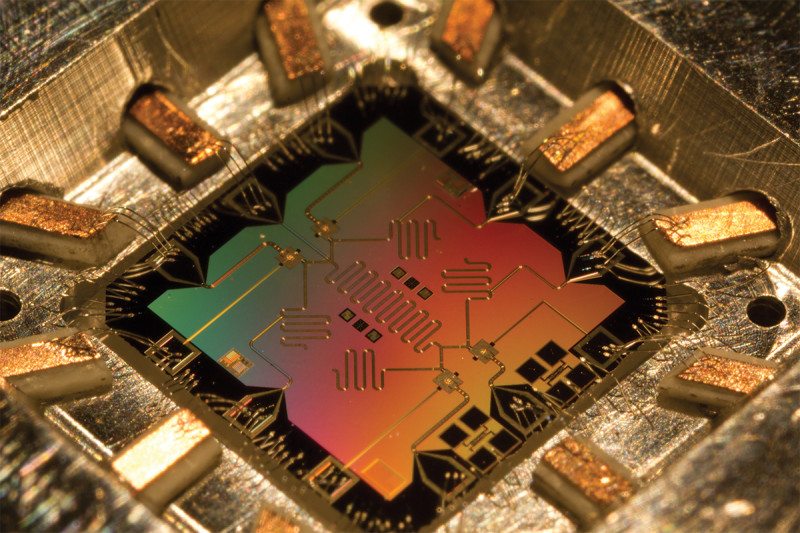

Picture 1: Quantum computer processor qubit.jpg: Qubit placed in the center of a processor

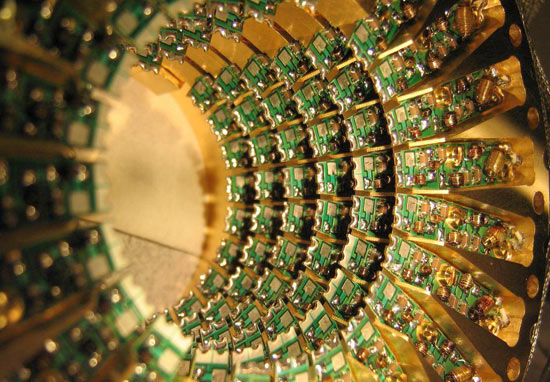

Picture 2: Quantum computer funnel.jpg: Plates distributed cylindrically in a quantum computer tunnel

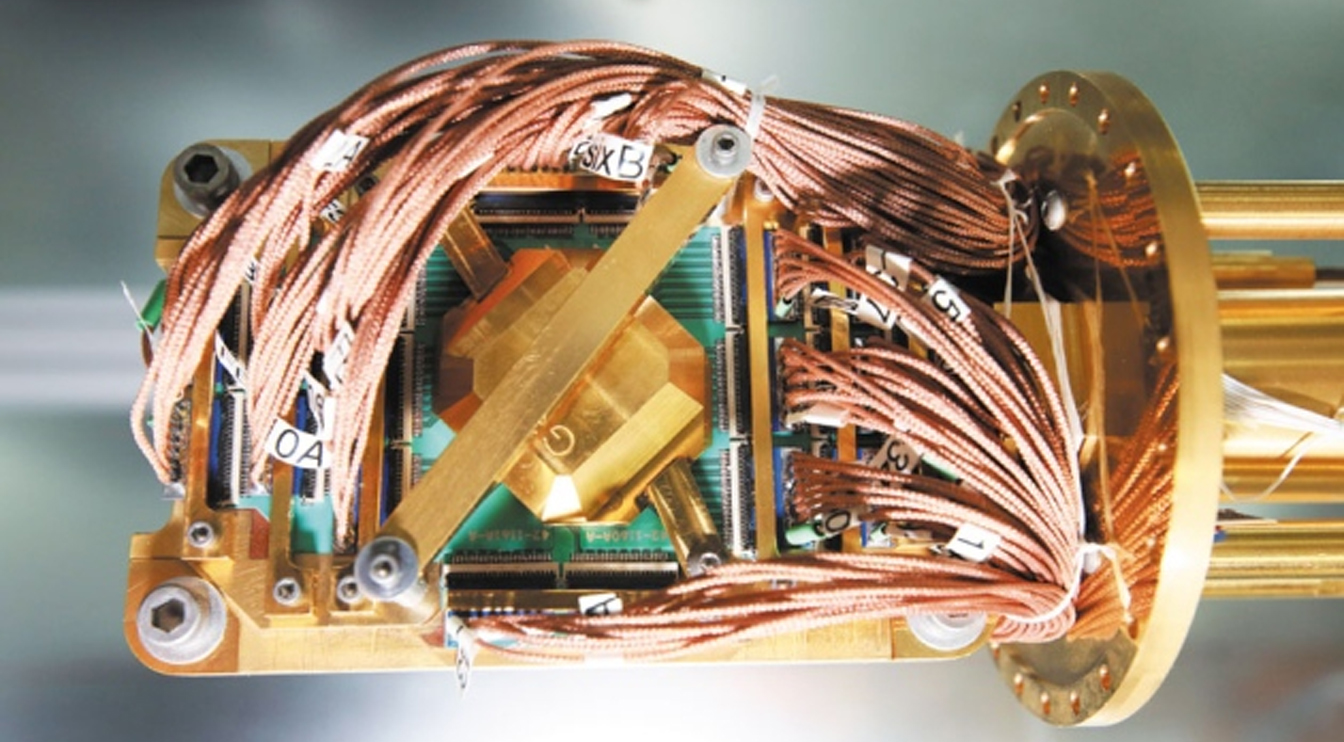

Picture 3: Quantum computer cooling.jpg: Circuit case between liquid-helium-cooled heat exchangers

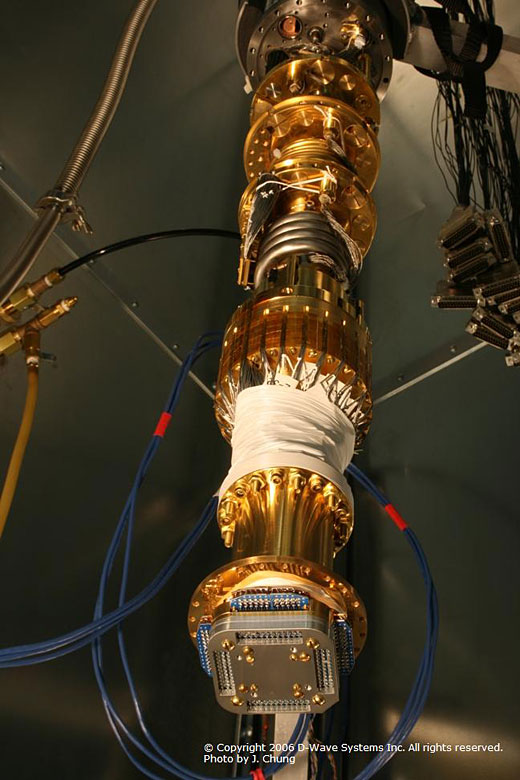

Picture 4: Quantum computer processor mounted.jpg: Processor mounted at the end of a tower with heat exchangers and circuit control units

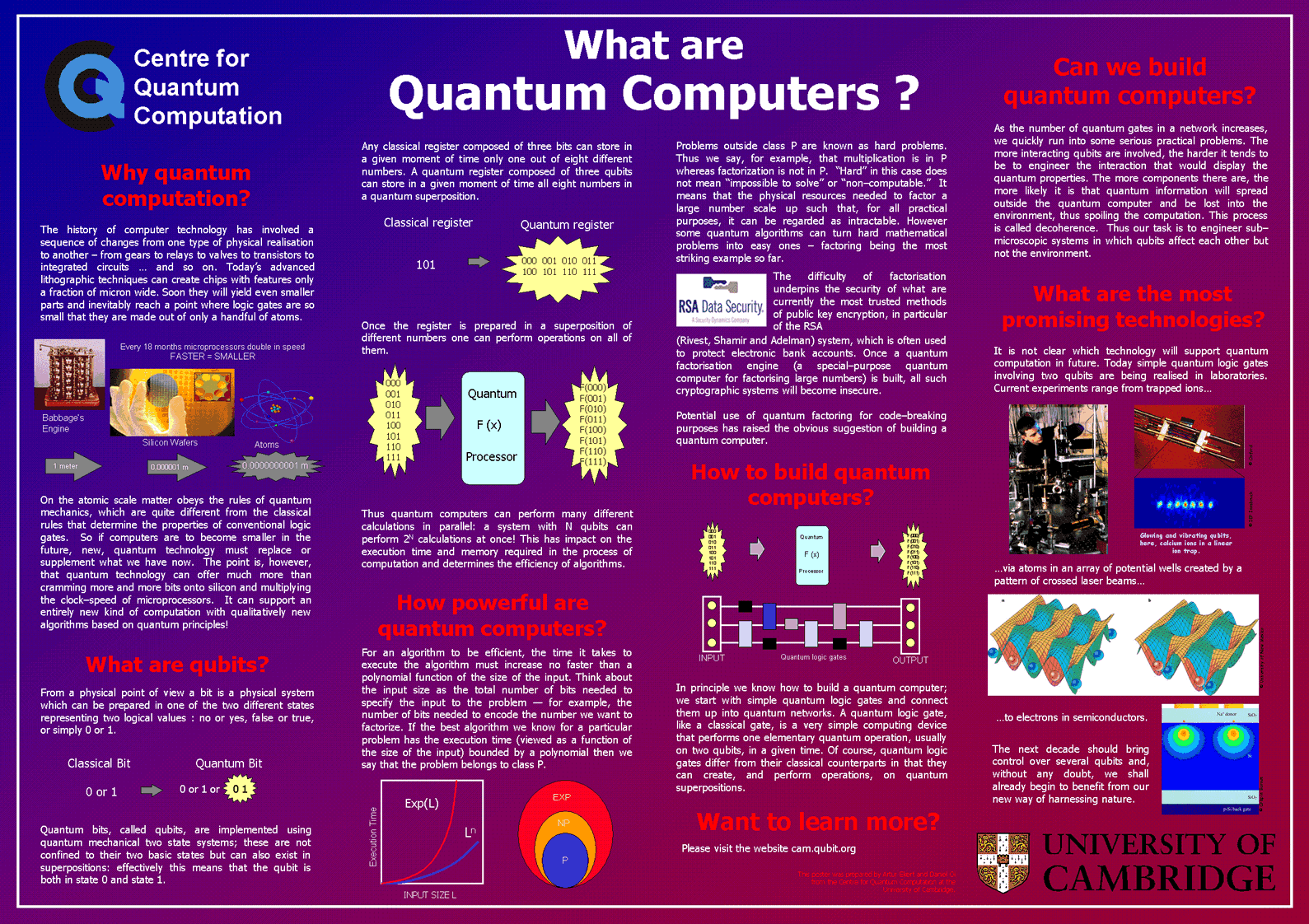

Picture 5: What are the quantum computers?; University of Cambridge

And99rew

Non-quantum algorithms that are used to analyze the data can predict the state of the grid, but as more and more phasor measurement units are deployed in the electrical network, we need faster algorithms.

CaffD

If a problem is being analyzed under complexity theory, then by definition it is not an impossible problem, as complexity theory only works on computable problems. The study of “impossible” problems is the realm of computability theory, so it is not much of a stretch for a computer scientist to assume they were talking about computability when they talk about “impossible problems”.

So, the title implied it was a breakthrough in computability theory (discovery of a new set of problems that was computable for a QC but incomputable for a classical Turing machine), but the actual article is about a breakthrough in complexity theory.

Norbert Biedrzycki

If an abacus is Turing complete, then so is a pen and a napkin, a chalkboard and chalk, bits of string, or a bag of anything. Because you can add a human to any of them and have a Turing machine. But you don’t need any of them because a human can just use their imagination. So none of those things are Turing complete by themselves, and none of them can be used to make something that wasn’t Turing complete to now be so.

Norbert Biedrzycki

Quantum computers are similar, but with an extra tool in the toolbox. You can poke quantum states in ways equivalent to flipping a coin, but QM can manipulate those probabilities as waves propagating through the state space. The magic happens when those probability waves are aligned just so, and some of them add up while others cancel out. It turns out there are a lot of problems where you can set up most of the results you don’t want to un-happen. Basically, given that quantum mechanics lets you do things with counterfactuals and correlations like measure interactions that never happened, never mind the Bell Inequality, it’s to be expected that it can do more things even than letting the computers flip coins normally.
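The "add up while others cancel out" effect can be seen on a single simulated qubit. The following is a rough sketch in plain Python, assuming the standard Hadamard gate as the "coin flip"; the helper names `apply` and `probabilities` are made up for this example. Applying the gate once gives a 50/50 outcome, but applying it twice does not give a more random result, as two classical coin flips would. The amplitudes for the outcome 1 have opposite signs and cancel.

```python
import math

# Hadamard gate: the quantum counterpart of flipping a fair coin
S = 1 / math.sqrt(2)
H = [[S, S],
     [S, -S]]


def apply(gate, state):
    """Multiply a 2x2 gate by a 2-element vector of amplitudes."""
    return [sum(gate[row][col] * state[col] for col in range(2)) for row in range(2)]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]


if __name__ == "__main__":
    zero = [1.0, 0.0]            # qubit prepared in state 0
    once = apply(H, zero)        # equal superposition of 0 and 1
    twice = apply(H, once)       # amplitudes for 1 cancel, amplitudes for 0 add up
    print("after one gate: ", probabilities(once))
    print("after two gates:", probabilities(twice))
```

The minus sign in the gate is what lets one path subtract from another; quantum algorithms are designed so that this cancellation removes the unwanted answers.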

Oscar2

Although a full-blown quantum internet, with functional quantum computers as nodes connected through quantum communication channels, is still some way off, the first long-range quantum networks are already being planned.

AndrewJo

Good one. Every time information on this subject appears, it is worth recalling the pioneer Raymond Kurzweil. Thank you very much for this publication; this is where the future of biotechnology and artificial intelligence lies.

johnbuzz3

The Gartner Report states that within 4 years, 20 percent of corporations will have a budget for quantum computing. Within ten years, it should be an integral component of technology.

Norbert Biedrzycki

The word “impossible” is wrong in this context for two reasons:

– Any classical computer (be it a theoretical Turing machine, or simply your phone) would happily solve the problem given a small enough data input. The problem in itself is not impossible for classical computers, it just quickly becomes unmanageable as the size of the input increases.

– We all “knew” about the quantum efficiency advantage. The thing that is new, what this article brings to the table, is the fact that it presents a mathematical proof. Mathematical proofs are the realm of math and theoretical computer science, and in those fields the term “impossible” doesn’t mean “efficiency disparity”, it doesn’t mean “impossible with current tech” or even “impossible in the confines of the event horizon of the observable universe”, it means “impossible even for an imaginary Turing machine with infinite tape running for infinite time”.

CaffD

The researchers at IBM were careful not to use the word “impossible” in the paper or in their press release, so I’m just being as pedantic as the authors of the article themselves. In fact the press release is brilliantly written, being accessible without being misleading (unlike the title of this post).

And there is nothing mathematically impossible about my assumption. Yes, it is known that the standard qubit model is Turing equivalent, but there is no proof that this extends to the whole of quantum physics (we can’t prove the negative: that there is no possible Turing-superior machine).

Norbert Biedrzycki

I really didn’t know you could use traditional methods against quantum computers; you learn a new thing every day. However, it makes me wonder if this has something to do with the fact that quantum computers are simply too slow at present; I wonder if it will be the same once we get true quantum supercomputers.

Oscar2

There seems to be a theoretical limit to any Moore’s Law-type trend that will apply with quantum circuitry. And Moore’s Law is already exhausted for classical circuits without even needing a theoretical reason to be exhausted. There’s no reason to think spatial density of switches is a chief concern for the efficiency of quantum computers. Unless you’re inferring some analogous exponential scaling trend for the size of dilution refrigerators, which would make more sense.

Norbert Biedrzycki

You’re taking an arbitrarily purist stance on what is a “computer” though. For instance, a computer lets people play video games, which inherently require an input and output device to meet the requirement of “play”, and a video monitor to be a video game. An abacus will not allow a person to play a video game, they could calculate the necessary algorithms to perform the game functions, but that’s not playing the game.

tom lee

.. and great article

tom lee

Scientists May Have Found a Way to Combat Quantum Computer Blockchain Hacking – The principle is based on Zero-knowledge proofs which allow you to validate information without sharing it.

https://beta.ourmedian.com/files/1523/consensus—immutable-agreement-for-the-internet-of-value

TommyG

A quantum computer exploits quantum physics to rapidly uncover the right answer to a problem by sifting through and adjusting probabilities, while a classical computer will be burning up memory and time looking at each potential answer in turn.

https://www.futurity.org/quantum-computing-simulation-1799802/

johnbuzz3

Interesting, but what are the applications of this tech?

Norbert Biedrzycki

Quantum computing use cases

TomK

IBM Just Broke The Record of Simulating Chemistry With a Quantum Computer

Last year, Google engineers simulated the bonding of a pair of hydrogen atoms on its own quantum computer, demonstrating a proof of principle in the complex modelling of the simplest arrangement of energies in molecules.

Molecular simulations aren’t revolutionary on their own – classical computers are capable of some pretty detailed models that can involve far more than three atoms.

But even our biggest supercomputers can quickly struggle with the exponential nature of keeping track of quantum interactions of each new electron involved in a molecule’s bonds, something which is a walk in the park for a quantum computer.

https://futurism.com/ibm-just-broke-the-record-of-simulating-chemistry-with-a-quantum-computer/

johnbuzz3

Chemistry is one of the first commercially lucrative applications for a variety of reasons. Researchers hope to discover more energy-efficient materials to be used in batteries or solar panels. There are also environmental benefits: about two percent of the world’s energy supply goes toward fertilizer production, which is known to be grossly inefficient and could be improved by sophisticated chemical analysis.

Norbert Biedrzycki

https://www.ibm.com/blogs/research/2018/10/quantum-advantage-2/

What the scientists proved is that there are certain problems that require only a fixed circuit depth when done on a quantum computer even if you increase the number of qubits used for inputs. These same problems require the circuit depth to grow larger when you increase the number of inputs on a classical computer.

Tom Jonezz

It seems with each new major development in computer technology, the steps climbed are progressively steeper, and the complexities become even more overwhelming. From bits and bytes, transistors and currents switched on and off, to qubits and quantum states doing computing and saving data with atoms and molecules, the last industrial revolution crawled in its development compared to the last 50 years of the digital revolution. Thank goodness, in this case, at least, the same company that’s building the ultimate hacking tool is simultaneously working on a set of algorithms beyond its reach.

johnbuzz3

If quantum works, Blockchain is the least of your worries; the whole financial system infrastructure will have to be reworked.

Norbert Biedrzycki

Very true. But it will take years. Blockchain reminds me of internet 10 years ago

CaffD

Depends on where you’re starting from… we taught from “Computational Complexity” by Papadimitriou and “Introduction to the Theory of Computation” by Sipser. The latter is probably fine if you don’t have a ton of CS knowledge from the get-go. Computational Complexity: A Conceptual Perspective has free drafts online, that’s another good book.

Here is a good overview of the most interesting aspects, including quantum stuff.

Nothing beats a course in it though. See if a university near you will let you audit a class, most profs don’t care

Norbert Biedrzycki

The risks that quantum computers pose to existing cryptographic schemes are well understood, and are being designed against for the future. We’ll be fine. https://en.wikipedia.org/wiki/Post-quantum_cryptography

Tom Jonezz

Current cryptography depends on math problems that might take too long for anyone to even bother wasting computer resources on, but they’re solvable. And the bad news is that quantum computers with 1,000-qubit power could solve them in moments, not ages. The current public- and private-key encryption and digital signatures are based on megabyte-size algorithms. (For those who might be interested in the mathematical reason why cryptographers say quantum computers will be able to easily handle this kind of secret coding, do a search for something called Shor’s Algorithm.)

Andrzej44

IBM sees quantum computing going mainstream within five years

IBM predicts five technologies that will change the world in the next five years.

From quantum computing to “unbiased” artificial intelligence, CNBC breaks down what the predictions will mean.

https://www.cnbc.com/2018/03/30/ibm-sees-quantum-computing-going-mainstream-within-five-years.html

Norbert Biedrzycki

Inside of a quantum computer

Tesla29

This is an exciting time to combine machine learning with quantum computing. Impressive progress has been made recently in building quantum computers, and quantum machine learning techniques will become powerful tools for finding new patterns in big data.

johnbuzz3

Most quantum algorithms are probabilistic. This means that after you run the algorithm, you would have, say, a 99% chance of your result being correct. You could run it longer and have your chance of being correct grow closer and closer to 1. We can always run deterministic (classical) algorithms in parallel that “verify” the result. Just have your quantum algorithm regularly output its best guess to a classical computer, which checks whether the answer is in fact correct.
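A rough sketch of that guess-and-verify loop, using factoring as the example because a proposed factor is trivial to check by division. The quantum part is faked: `noisy_factor_guess` is a hypothetical stand-in that returns the right factor only some of the time, and the 60% success rate and the number 3233 are assumptions chosen for illustration.

```python
import random


def noisy_factor_guess(n, true_factor, p_correct, rng):
    """Stand-in for a probabilistic quantum routine: right only some of the time."""
    if rng.random() < p_correct:
        return true_factor
    return rng.randrange(2, n)  # otherwise an arbitrary candidate


def is_valid_factor(n, candidate):
    """Cheap deterministic check performed on the classical computer."""
    return 1 < candidate < n and n % candidate == 0


def solve_with_verification(n, true_factor, p_correct=0.6, seed=1, max_runs=100):
    """Keep asking for guesses until one passes the classical check."""
    rng = random.Random(seed)
    for attempt in range(1, max_runs + 1):
        guess = noisy_factor_guess(n, true_factor, p_correct, rng)
        if is_valid_factor(n, guess):
            return guess, attempt
    return None, max_runs


if __name__ == "__main__":
    factor, attempts = solve_with_verification(3233, 53)
    print("verified factor:", factor, "after", attempts, "attempt(s)")
```

This only works well for problems where checking an answer is much cheaper than finding it, which is the case for factoring.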

CaffD

Very valid point. Thank you. A complex topic, but clearly explained.

DDonovan

To be worried that some future robotic intelligence will watch videos of Spot getting kicked and be upset is naive. Why should an intelligence relate itself to a machine, thereby debasing itself to that level?

Perhaps there is an argument that treating a machine that resembles an animal poorly has ramifications to people to people or people to animal interactions. However, there is no need to protect a robot besides the policies already in place for protecting machines and property.

John Accural

So far, the smartest AI ever made was Watson. Watson is an IBM AI that is capable of answering many questions and learning answers to questions. Pi is infinite, no one has solved it. The Quantum Computer would need to know the answer to Pi before being released, which no one knows. It also needs to know what happened before the “Big Bang” happened. (The Big Bang is the creation of the galaxy, scientists say.)

Don Fisher

Can somebody explain the technical difficulties, and what it means that the program has to be run in one step? I imagine it has to do with the collapse of the wave function: one needs the superposition of entangled states to keep the qubits running, is that right? And what about decoherence? Does that have to do with the lifetime of the wave function in the superposition state? This ‘one step’ thing is not clear to me either. What I’m going to say is perhaps just stupid, but if you can make an algorithm that arrives at the solution in only one step, why do you need a quantum computer to run it? Because of the volume of data in the input?

TomCat

Qubits are exposed to a lot of noise from the environment (like electromagnetic waves). Noise can change the state of a qubit, perhaps moving a qubit that was in state 3 to state 3.01: 51% 0, 49% 1. You might think of this noise as ‘partial measurement’, because it changes the state of the qubit, but only sorta.

What you are saying is that scientists are able to track this partial measurement as it occurs to their qubit. So, if they know they prepared a qubit in state 3, they can watch the noise that happens to it and know that it’s been transferred to a different state. Sounds simple 🙂

TomHarber

Great, but how exactly does that help us? How do we know which combination of data, algorithms, statistics … will be the right one, unless we already know the answer, or already have an algorithm that can crack the problem? It made sense to me that we could model a molecule with it, but I still do not see why, just because a particle has a large set of possibilities, it is far more efficient than some special-architecture computer. Computing power?

Karel Doomm2

One calc step = one qubit. I suppose it’s one step in the sense that you only read the inputs once. But actually, you probably need to perform the quantum operations many times to get a significant result. When the term one step was used in the previous posts, I think it refers to a superposition that is being processed – not just a “position”. In fact, even the simplest quantum algorithms require a setup and a read – two steps.

TonyHor

The major goal in quantum computing is to be able to store and manipulate “qubits”. A single qubit is able to be in 2 states at the same time. In some devices, it can be in more states than that. But a collection of qubits can be in a large number of states. For example, 6 qubits could be in 64 possible combinations. Perhaps the most well-known quantum computing algorithm is “Shor’s Algorithm”, which factors the large composite numbers that RSA encryption relies on. But quantum computers will not be suitable for all computation problems. For example, the user interface to a quantum computer will be a normal computer.

Jacek B2

Very valid point

Norbert Biedrzycki

Interesting recent development:

China has beaten the world at building the first ever quantum computing machine that is 24,000 times faster than its international counterparts.

Making the announcement at a press conference in the Shanghai Institute for Advanced Studies of University of Science and Technology, the scientists said that this quantum computing machine may dwarf the processing power of existing supercomputers.

Researchers also said that quantum computing could in some ways dwarf the processing power of today’s supercomputers.

http://indiatoday.intoday.in/story/worlds-first-quantum-computing-machine-build-in-china/1/944280.html

Adam Spikey

Interesting article. But it’s not only about speed of data processing but flow of data and moreover outcome of analysis. For that we need open-minded human brain

Adam Spark Two

If we were to engineer an organic brain and program it with simple impulses such as to feed, survive etc. and eventually grow, self-improve and reproduce (providing its physical form was advanced enough to allow these processes to take place), it may be accurate to describe its response to stimuli as emotion. However, once it reaches a state where it is self-aware and experiences the world around it in a conscious way, it would not be accurate to call it a machine, but rather an engineered life form.

DDonovan

Can somebody tell me what programming language is used for quantum computing?

JohnE3

There is no special “language”. So far everything is very low level, so you can use whatever language you want that can create the appropriate sequence of actions and commands. Most people will probably use Matlab or Python simply because they are the most common languages for this type of task. D-Wave has written their own software interface for their hardware that can then be called from another programming language. Hope this helps 🙂

Check Batin

Are you sure? What about “R” and machine learning applications?

TommyG

… and there are lots of problems that could be addressed using quantum computers, e.g. quantum chemistry and biology. D-Wave sells recent state-of-the-art machines. These computers already work and can already solve real-world problems. They should excel at certain optimization problems, although so far all their circuits are so small that they can only be used for small problems. But again, we should know in just a few years whether or not they will be of any practical importance.

Norbert Biedrzycki

More practical applications of this technology are being developed: propensity modelling, cyber security, behavioural analysis, just to mention a few of them.

TommyG

Interesting article. But, no question that quantum computing is “real” in the sense that we know that it works and we can already solve (small) problems. However, whether or not a “general purpose” quantum computing will ever be of practical importance is still an open question. However, what is somewhat more certain is that the technology for quantum simulations will reach a point where it will be useful in just a few years. I believe so. But what we need is a set of algorithms to run on quantum machines

Don Fisher

It is complex to develop such mathematical procedures. That’s why quantum computers are going to be limited to the labs only.

CaffD

Now I understand the very basics of quantum computing: that it is based on qubits, which in turn exploit the phenomena of entanglement and superposition to perform calculations that would be impossible to execute in binary systems. But I have also read that there is scepticism as to whether a working quantum computer can be built, because of the ‘problem of decoherence’, which poses a huge technical challenge. Some scientists, such as Scott Aaronson, are highly sceptical. So is the quantum computer hype? Or is it real?

TonyHor

This is a bit too simplified. If there are several qubits entangled, their entire arrangement has a probability distribution and the entire thing becomes determined at once. That is, in fact, what gives the QC its potential. Consider that a system of 500 qubits could instantly settle into one of 2^500 ≈ 3 × 10^150 possible combinations. A lot. That’s the computation power we are looking for.

johnbuzz3

Big IT companies are trying hard to develop quantum computers because using the weirdness of quantum mechanics should increase data-processing capacity. I personally believe quantum computers could make AI software much more powerful. NASA hopes quantum computers could help schedule rocket launches and simulate future missions and spacecraft. I think that among the technologies being developed right now, it’s a truly disruptive one that could change how we do a lot of things.

Norbert Biedrzycki

Correct. Disruptive but limited to highly specialized algorithms, mainly based on probability. We are still lacking them. Also, the algorithms require more steps to set up the entanglements and quantum state before the system of qubits is “measured”. Then there are other steps before and after the quantum processing to get the data into and out of the form used to set up the quantum state. Again, based on probability.