Programmers who develop algorithms for autonomous vehicles should prepare to see their work become the subject of a heated ethical debate rather soon. The growing popularity of self-driving cars is already giving headaches to lawyers and legislators. The reason is the complexity of deciding what is right and wrong in the context of road safety.

Self-driving cars are enjoying increasingly good press and attracting growing interest. The industry is keeping a close eye on the achievements of Tesla and Google. The latter has been releasing regular reports showing that autonomous vehicles are clocking up ever more miles without failing or endangering traffic safety. A growing sense of optimism regarding the future of such vehicles can be felt all across the automotive industry. Their mass production will soon be launched by Mercedes, Ford, Citroen, and Volvo.

Nearly every major manufacturer today has the capacity to build a self-driving car, test it and prepare it for traffic. But this is only a start. Demand for such vehicles and the revenues derived from their sale will continue to be limited for a while due to the complex legal and… ethical questions that need to be resolved.

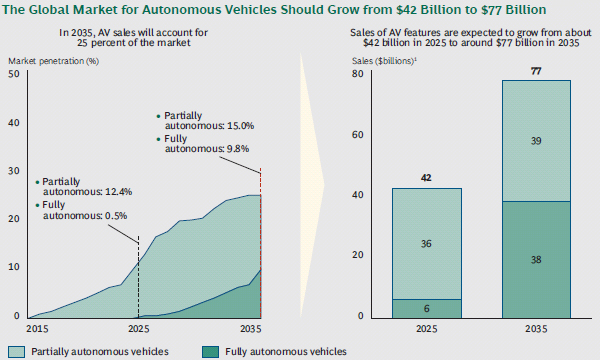

Autonomous cars' market share is projected to reach 13% of all vehicles on the road by 2025, worth $42 billion, and 25% of all cars by 2035, worth $77 billion. Source: BCG

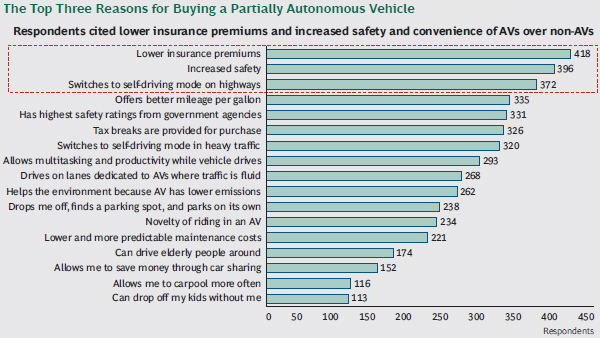

Top three reasons for buying a fully autonomous vehicle. Source: BCG

Algorithms of life and death

An example is now in order. Picture a road with a car driven by a man. Next to him sits his wife, with two kids in the back seat. Suddenly, a child chasing a ball runs onto the road. The distance between the child and the car is minimal. What is the driver’s first instinct? Either to slam the brakes on sharply, or veer off rapidly… landing the car in a tree, on a wall, in a ditch or, in the worst case scenario, mowing down a bunch of pedestrians. What would happen if an autonomous vehicle faced the same choice? Imagine that the driver, happily freed of the obligation to operate the vehicle, is enjoying some shuteye. It is therefore entirely up to the car to respond to the child’s intrusion. What will the car do? We don’t know. It all depends on how it is programmed.

Briefly put, in ethical terms there are three theoretical programming possibilities that will largely determine the vehicle’s response.

In one of them, the assumption is that what counts in the face of an accident and a threat to human life is the joint safety of all people involved (i.e. the driver, the passengers and the child on the road).

An alternative approach puts a premium on the life of pedestrians.

A third one gives priority to protecting the life of the driver and the passengers.

The actual response depends on the algorithm selected by a given car maker.
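To make the three options concrete, here is a minimal sketch of how such a choice could be expressed as a weighting of risks. Everything in it is hypothetical: the `Policy` names, the `Maneuver` fields, the weights and the risk figures are invented for illustration and do not describe any car maker's actual software.

```python
from dataclasses import dataclass
from enum import Enum


class Policy(Enum):
    ALL_EQUAL = "all_equal"              # joint safety of everyone involved
    PEDESTRIANS_FIRST = "pedestrians"    # premium on the life of pedestrians
    OCCUPANTS_FIRST = "occupants"        # priority to driver and passengers


@dataclass(frozen=True)
class Maneuver:
    name: str
    occupant_risk: float    # hypothetical expected harm to people in the car, 0..1
    pedestrian_risk: float  # hypothetical expected harm to people outside, 0..1


# Hypothetical (occupant weight, pedestrian weight) pairs; the values are made up.
WEIGHTS = {
    Policy.ALL_EQUAL: (1.0, 1.0),
    Policy.PEDESTRIANS_FIRST: (1.0, 3.0),
    Policy.OCCUPANTS_FIRST: (3.0, 1.0),
}


def choose(maneuvers, policy):
    """Return the maneuver with the lowest weighted risk under the given policy."""
    w_occ, w_ped = WEIGHTS[policy]
    return min(
        maneuvers,
        key=lambda m: w_occ * m.occupant_risk + w_ped * m.pedestrian_risk,
    )


# Invented risk estimates for the child-on-the-road scenario.
OPTIONS = [
    Maneuver("brake_straight", occupant_risk=0.05, pedestrian_risk=0.60),
    Maneuver("swerve_into_wall", occupant_risk=0.50, pedestrian_risk=0.05),
    Maneuver("brake_and_steer_to_ditch", occupant_risk=0.25, pedestrian_risk=0.20),
]

for policy in Policy:
    print(policy.name, "->", choose(OPTIONS, policy).name)
```

With these invented numbers each policy selects a different maneuver, which is the point: the same situation and the same sensor data lead to different outcomes depending on a weighting that somebody had to choose.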

Recently, a Mercedes representative stated that his company’s approach is to value the safety of autonomous vehicle passengers the most. OK, but would programming a car in such a way be legal? Probably not. It is therefore difficult to view such declarations as binding. However, this does not make the liability for vehicle algorithms any less critical. And somebody will have to bear that liability.

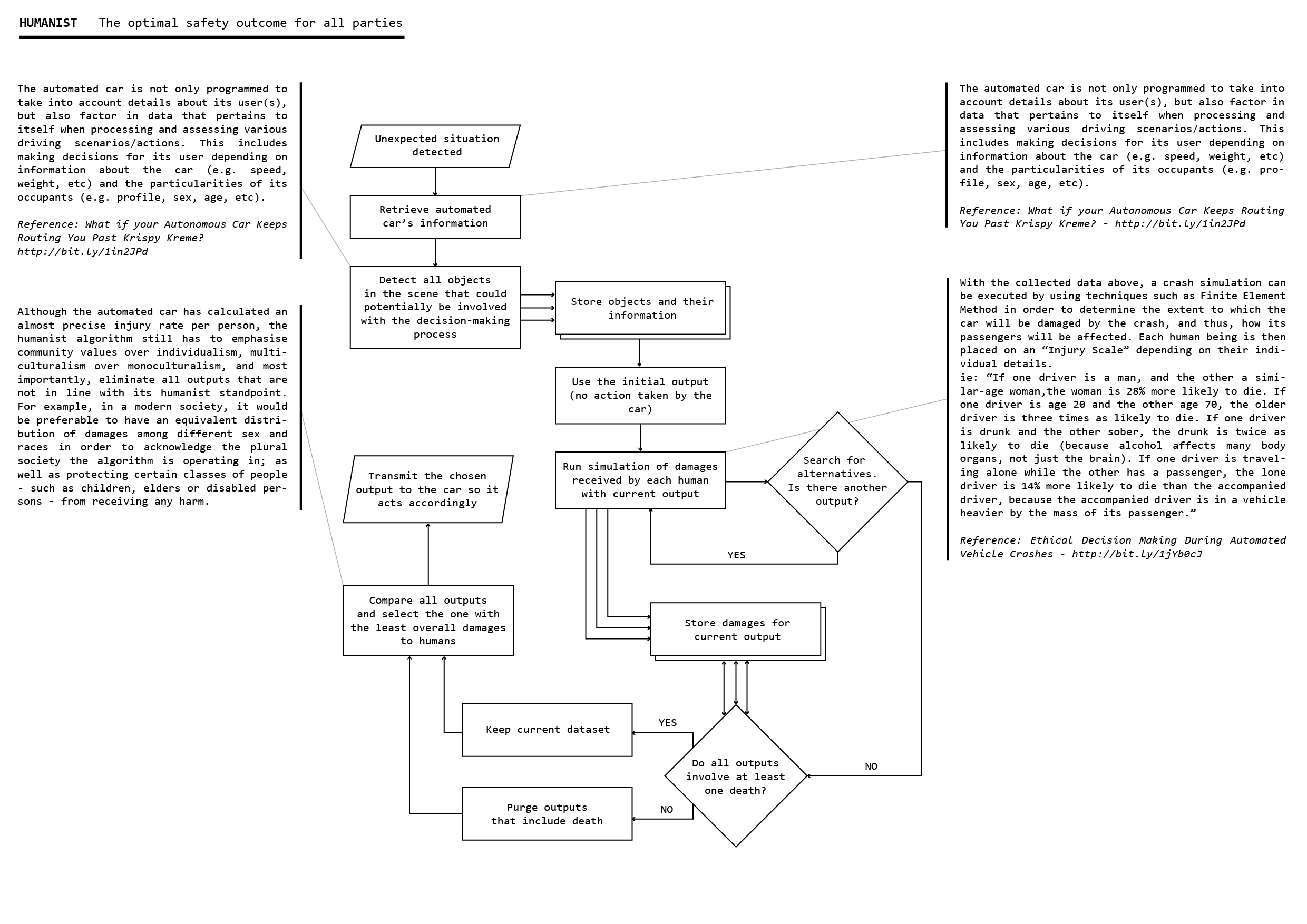

Autonomous car accident decision algorithm. Option: the optimal safety outcome for all parties. Source: MCHRBN.net


The research that kills demand?

It may also be interesting to go over the opinions of prospective self-driving car buyers. Studies on accidents and safety have been conducted in several countries. The prestigious Science magazine quotes a study which involved slightly over 1,900 people. Asked whose life is more important in case of an accident: that of the passengers or that of the passers-by, the majority of the respondents pointed to the latter. However, responding to the question of whether they would like this view to become law, and whether they would purchase an autonomous vehicle if this was the case, they replied “no” on both counts!

If these examples are indeed emblematic of today’s ethical confusion, corporations cannot be very happy.

Until prospective buyers are fully certain what to expect when a self-driving car (which in theory should be completely safe) causes an accident while they are behind the wheel, one can hardly expect the demand for such products to pick up. People will not trust the technology unless they feel protected by law. But will lawmakers be able to foresee all possible scenarios and situations?

Dashboard of futuristic autonomous car – no steering wheel. Nissan IDS Concept. Source: Nissan

The future is safer

One can hardly ignore the extent to which modern technology itself increases our sense of safety. We can be optimistic about the future and imagine a time when almost all vehicles out there are autonomous. Their internal electronic systems will communicate in real time on the road and make cars respond properly, including to other road users. Safety will be ensured by on-board motion detectors and radars which will adjust vehicle speed to the velocity of the traffic and to the general surroundings.

Even today, autonomous vehicle manufacturers love to invoke studies which show that self-driving cars will substantially reduce accident rates. For instance, according to the consultancy KPMG, by 2030 in the United Kingdom alone, autonomous vehicles will save the lives of ca. 2,500 accident victims. Unfortunately, such projections remain purely theoretical. Clearly, roads will for a long time still be filled with conventional vehicles without electronic drivers.

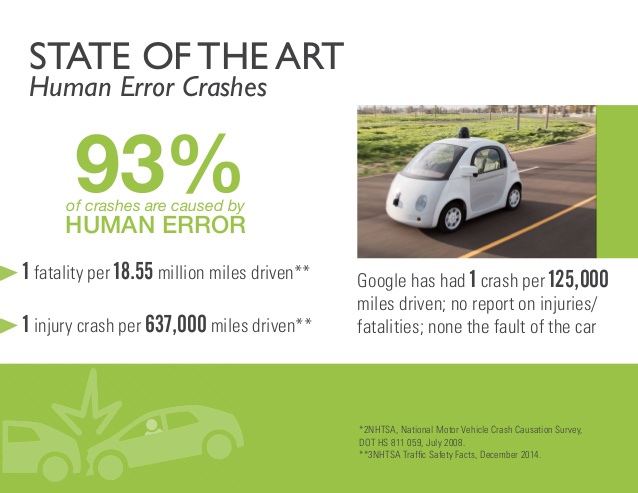

95% of crashes are caused by human error. Source: Google

Time for changes

By and large, I personally believe that technology will benefit us all. However, the need for regulation and ethical issues will never go away. As of now, the development of the legal framework has a long way to go. Considering that new technologies tend to catch us by surprise with their speed of development and how rapidly they change reality, I am not sure we can defer certain decisions any longer.


Oniwaban

Right. AI is nothing like what people make it out to be. It doesn’t have self-awareness, nor can it outgrow a human. To this day, no program has been demonstrated that can grow & develop on its own. AI is simply a pattern, or a set of human-made instructions that tell the computer how to gather & parse data. GPT-3 (OpenAI) works very similarly to a Google search engine. It takes a phrase from one person, performs a search on billions of website articles and books to find a matching dialog, then adjusts everything to make it fit grammatically. So in reality this is just like performing a search on a search, on a search, on a search, and so on….

Adam Spikey

Hi Norbert, how much space do I have to respond? We should be extremely concerned. I am going to list some short concerns and then several links, including two by Paul Michelman, Editor in Chief of MIT Sloan Management Review. Paul is on the network and you may want to reach out to him. Concerns: no global governance; no international laws; tech companies trying to influence policy makers; policy makers who may be unclear about or unfamiliar with the complexity of the problem they must write policy for; AI mind labs harvesting data from sites whose users are unaware they are being studied; technologists changing human biology while we give coders a key to humanity; cyborg technology now live with a brain interface; cyber attacks brought upon society like a perfect storm with AI technology; under-reporting of cyber attacks due to lack of controls, with ransoms paid by companies. There is more… Have a great weekend.

John Accural

The ultimate question is whether the car should swerve into a wall (killing its passengers) to avoid colliding with a pedestrian who has suddenly stepped into its path, or continue on its path and kill the pedestrian when there is no way to stop in time.

I chose to continue on and kill the pedestrian, because otherwise people could abuse the system and kill people by intentionally stepping into roads and causing self driving cars to swerve into accidents.

Adam T

It should be noted that no ethically-trained software engineer would ever consent to write a Destroy_Baghdad procedure. Basic professional ethics would instead require him to write a Destroy_City procedure, to which Baghdad could be given as a parameter.

Adam Spikey

https://www.lawgeex.com/AIvsLawyer.html?utm_source=google&utm_medium=cpc&utm_campaign=Global_sch_ai_vs_lawyers&utm_adgroup=56678748910&device=c&placement=&utm_term=%2Bai%20%2Blawyer&gclid=EAIaIQobChMI8c7lgLfy3gIVCYuyCh2fzgybEAAYASAAEgJv5fD_BwE

Guang Go Jin Huan

Right. Once something like AI is turned on, and if intelligence and consciousness are something that can be created in a non-biological way, we have no way to stop it. It can gain access to weapons or manufacturing. Or it can simply manipulate people with such efficiency we won’t even realize it is being done. It quickly becomes so smart no one understands it anymore and it can do things I can’t even imagine or make up in this reply, because I am a meat bag.

I agree that this seems like sci fi garbage magic…but…Elon Musk and Stephen Hawking are not Alex Jones. They work with some of the smartest people on earth, probably have access to high level research the public doesn’t see, and they seem to be worryingly preoccupied with it. I personally don’t see how we get from modern supercomputers to a real AI with present tech, but that doesn’t mean a team in some high-level laboratory isn’t close to a breakthrough.

Maybe we can design an AI slave where we put limitations on what it can do and how smart it can get and force it to work for us. However, once we have it, what stops other people from designing their own with less effective safeguards? For an AI to be useful it has to be smarter than us, otherwise what is the point?

However, I personally hope we all become cyborgs and merge with the machines, just like the shitty Mass Effect 3 ending.

JohnE3

In 2010 the British robotics funding agency, the EPSRC, worked on ethical issues in robotics. The five principles are:

– Robots should not be designed as weapons, except for national security reasons.

– Robots should be designed and operated to comply with existing law, including privacy.

– Robots are products: as with other products, they should be designed to be safe and secure.

– Robots are manufactured artefacts: the illusion of emotions and intent should not be used to exploit vulnerable users.

– It should be possible to find out who is responsible for any robot.

DDonovan

The words “intelligence” and “self awareness” get thrown around too much with regard to robotics. It implies some sort of magic. Sure, perhaps at some point we’ll have a level of sophistication for which some form of intelligence develops or emerges but we are far from that. Mimicry is not intelligence.

John Accural

ML will be used only if you have an unlimited amount of tries or have a large amount of historical data

John McLean

Robots need civil rights too: https://www.bostonglobe.com/ideas/2017/09/08/robots-need-civil-rights-too/igtQCcXhB96009et5C6tXP/story.html

Mac McFisher

The construction of intelligent robots will come packaged with a slew of ethical considerations. As their creators, we will be responsible for their sentience, and thus their pain, suffering, etc.

Adam Spikey

This is what I’ve been saying all along. Every individual Earthling, not just humans and AI, is a valuable component in the larger system, as each one of us has a unique set of skills, interests, and perspectives. To successfully achieve the goal of life (spreading itself outward across time and space) diversity is needed, not homogeneity.

We biological organisms need synthetic ones and vice versa.

TomHarber

We keep having this discussion of “Ethics” in programming vehicles, but the reality of the situation is that this is NOT how programming works at all.

The car is driving and detects an object ahead on the sidewalk moving on a collision course with the vehicle. The car already has in its memory the location of all other moving objects around it and determines if a lane change + braking, braking, a swerve, or a simple downshift will be enough to avoid contacting the object(s).

The car doesn’t start evaluating if a kid is going to be hit or if it should suicide you into a wall, it will not do that.

It’s programmed to do so. So programmers are responsible for the code, and therefore for the car’s behaviour.
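The avoidance check TomHarber describes can be sketched roughly as follows: try the mildest maneuver first and escalate only when it would not stop the car within the available gap. This is an illustration only. The function name, the deceleration values and the fallback are assumptions made up for the example, and the only physics used is the constant-deceleration stopping distance v²/(2a).

```python
# Hypothetical decelerations in m/s^2, ordered from mildest to strongest.
DECELERATIONS = [
    ("downshift", 1.5),
    ("braking", 7.0),
]


def first_sufficient_maneuver(speed_ms, gap_m, adjacent_lane_free):
    """Return the mildest maneuver that avoids contact with the object.

    speed_ms: current speed in metres per second
    gap_m: distance to the detected object in metres
    adjacent_lane_free: whether the tracked objects leave room for a lane change
    """
    for name, decel in DECELERATIONS:
        # Distance needed to stop at constant deceleration: v^2 / (2a).
        if speed_ms ** 2 / (2 * decel) <= gap_m:
            return name
    if adjacent_lane_free:
        return "lane_change_and_braking"
    # Nothing avoids contact: brake as hard as possible to reduce impact speed.
    return "emergency_braking"


print(first_sufficient_maneuver(10.0, 40.0, adjacent_lane_free=False))  # downshift
print(first_sufficient_maneuver(10.0, 10.0, adjacent_lane_free=False))  # braking
print(first_sufficient_maneuver(10.0, 5.0, adjacent_lane_free=True))    # lane_change_and_braking
```

Even in this sketch, the order of the list and the fallback in the last line are choices somebody wrote down, which is what the reply about programmers being responsible is getting at.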

TonyHor

I don’t want to be dependent so much on machines

DDonovan

For sure, 2016 was the year of AI. Innovations such as big data, advances in machine learning and computing power, and algorithms capable of hearing and seeing with beyond-human accuracy have brought the benefits of AI to bear on our daily lives. By working together with machines, people can now accomplish more by doing less. But machines left alone struggle with ethical issues unless instructed. What is the future? It seems E. Musk is advocating against super-AI, despite driving the whole auto industry revolution with his Tesla.

TommyG

We need laws to change to determine whether the designer, the programmer, the manufacturer or the operator is at fault if an autonomous machine is responsible for an accident. Autonomous systems must keep detailed logs so that they can explain the reasoning behind their decisions when necessary. This has implications for system design: it may, for example, rule out the use of artificial neural networks, decision-making systems that learn from statistical examples rather than obeying predefined rules.
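A decision log of the kind suggested here might look like the sketch below. The record fields, the function name and the "lowest estimated cost" rule are all hypothetical, chosen only to show what an explainable, rule-based decision record could contain.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DecisionRecord:
    timestamp_s: float      # seconds since the start of the trip
    speed_mph: float
    detected_objects: list  # e.g. ["pedestrian", "parked_car"]
    candidate_costs: dict   # maneuver name -> estimated cost (hypothetical units)
    chosen: str
    rule: str               # human-readable reason for the choice


def decide_and_log(timestamp_s, speed_mph, detected_objects, candidate_costs, log):
    """Pick the cheapest maneuver and append a JSON record explaining why."""
    chosen = min(candidate_costs, key=candidate_costs.get)
    record = DecisionRecord(
        timestamp_s=timestamp_s,
        speed_mph=speed_mph,
        detected_objects=detected_objects,
        candidate_costs=candidate_costs,
        chosen=chosen,
        rule="lowest estimated cost",
    )
    log.append(json.dumps(asdict(record), sort_keys=True))
    return chosen


trip_log = []
decision = decide_and_log(
    12.5, 28.0, ["pedestrian"], {"brake": 0.2, "swerve_left": 0.7}, trip_log
)
print(decision)
print(trip_log[0])
```

A record like this is easy to produce when the decision follows a predefined rule. With a learned model the costs could still be logged, but the `rule` field would have little to say, which is the design tension the comment points to.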

Check Batin

This all assumes that, for example, the decision making process would be similar to that of a human driver. It’s not. When humans drive, prevention doesn’t have a very significant role. We can make it have a significant role in autonomous driving situations. AI can and will observe situations which a human driver can never observe or learn.

TomK

God, it’s great that there are people here with the cognitive chops to have a productive conversation on this topic.

CaffD

Interesting read. Important ethical problem. Who is gonna be responsible for their mistakes? Manufacturer? What if machines design the next wave of machines?

John McLean

And then what? What will be the place for us?

TomCat

Can a robot truly make a good choice about ethical issues like defending the country, e.g. taking someone’s life? Not without a huge amount of information, processes, procedures being input. Can a robot handle that? Absolutely. And this is programmable even right now. I think easily. Can a robot handle that autonomously? Without any human or computer interaction? Yes, surely. Can a robot, completely on its own, decide the fate of a human being based on these things and not a simple hitlist? This scares me.

TomK

Volvo goes full and only electric from 2019 https://futurism.com/volvo-becomes-the-first-premium-car-maker-to-go-all-electric/

John McLean

… and I wonder when the rest will follow. China is becoming the leader in eco cars. Scale?

Simon GEE

When I was learning to drive, I drove downtown during rush hour one day. There was a busy intersection and so I waited for the other side to clear before I went through. As I did, someone from the side road did a red right turn to take up the spot that had cleared, leaving me stuck in the middle of the intersection for the entire light, blocking all the traffic. It was some of the most ridiculous driving I’ve seen and it was like my 3rd time driving ever.

Also, last night I saw someone who started going when the light turned green but were so slow that they were the only person to make the light from their lane.

Adam Spikey

AI can create diversity too, and maybe faster than us. If our “diversity” slows down AI progress to reach greater diversity, then we might become less desirable as we would force the diversity generation to be less optimal.

Simon GEE

The most interesting ethical dilemmas specifically concern robotization. The questions are analogous to those asked with regard to autonomous vehicles. Today’s robots are only learning to walk, answer questions, hold a beverage bottle, open a fridge and run. Some are more natural than others at these tasks. Robots will not only replace us in many jobs. They can really be helpful, in e.g. taking care of the elderly, where constant daily assistance is required.

TomK

That is why politicians should talk about a fundamental change of the education system, not about taxing robots. The problem is not robotization but that after 16 years of hard work at school our kids will have to compete with robots, as they learn there only what a machine can easily do after 2 weeks of being programmed!

Simon GEE

It seems like there is no field of science that Watson doesn’t have the ability to revolutionize. People in the futurology community have been talking up AI for so long and it’s finally coming to fruition. Our society is going to experience profound increases in productivity when this technology is cheap and ubiquitous.

Adam Spark Two

What are emotions? What is wrong, what is right? What is the difference? Emotions root from our memories and experiences that shape the way we create and perceive these emotions. All human beings experience emotions differently. The common ground between us all is that the emotions we fabricate for ourselves seem real to us, and us alone. As an individual, I cannot experience the emotions that another has created, only my own reaction to them. If the machine perceives its reactions as emotions, and real to itself, then cannot it be said that the machine is feeling emotions just as you or I would? Has this machine not reached a level of being that we could consider to be ‘human’?

Karel Doomm2

When it comes to a generalized AI, I think we don’t have a well enough defined problem to know exactly what class of problem we’re trying to solve. Neural networks and a few other passes are all “learning” tools that are vaguely analogous to neural function, but that’s all they are, not any real representation of the brain. I think this area of neuroscience is still kind of in the inductive reasoning stages. People have come up with many clever, mathematically elegant tools that can output similar information as the brain with similar inputs, but it’s still kind of “guessing what’s inside the black box.”

Check Batin

“Autonomous cars are ‘the vaccine that will cure deaths on the road’”, says E. Musk – “For each day that we can accelerate connected and autonomous vehicles, we will save 3300 people a day.”

DDonovan

Might be right, but who will control them when they get smarter?

Robert Kaczkowski

We, the people. We need legislation in place to control AIs

JohnE3

I do not believe in these numbers. Should be much bigger

John Accural

IMHO there is no reason for the car to make moral judgments. All it has to do is calculate the minimum damage possible, simple as that. Realistically speaking, all three of the scenarios mentioned are nonsense. The fact of the matter is, if we had true self driving cars in cities, these kinds of deaths would be 0. I can’t imagine a scenario where at 25 – 30mph the self driving car can’t figure out a way to minimize damage to 0. The stopping distance from 30mph is only 75 ft. Then you subtract the thinking distance (30ft), giving you a total of only 45 ft. This is a significant difference for a victim.
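The arithmetic in this comment can be checked with a short sketch. It assumes the commonly quoted rule of thumb behind the UK Highway Code's typical stopping distances (thinking distance of about 1 ft per mph, braking distance of about speed squared divided by 20, in feet). That formula is an assumption added here, not something stated in the comment, although it reproduces the comment's 75, 30 and 45 ft figures. The function names are invented, and treating an autonomous car as having no reaction delay at all is an idealization.

```python
def thinking_distance_ft(speed_mph):
    # Rule of thumb: roughly 1 ft travelled per mph before a human driver reacts.
    return float(speed_mph)


def braking_distance_ft(speed_mph):
    # Rule of thumb: speed squared divided by 20, in feet.
    return speed_mph ** 2 / 20


def stopping_distance_ft(speed_mph, human_driver=True):
    """Total stopping distance; an idealized machine skips the thinking distance."""
    total = braking_distance_ft(speed_mph)
    if human_driver:
        total += thinking_distance_ft(speed_mph)
    return total


for mph in (25, 30):
    print(
        mph,
        "mph: human",
        stopping_distance_ft(mph),
        "ft, no reaction delay",
        stopping_distance_ft(mph, human_driver=False),
        "ft",
    )
```

At 30 mph this gives 75 ft for a human driver and 45 ft without the thinking distance, matching the comment. A real system would still need some time to sense and actuate, so the true figure lies somewhere between the two.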

Check Batin

But it’s still an important issue, quite well described in this article. A crash above 45mph is always fatal

Simon GEE

In the majority of cases – 80% fatal

Guang Go Jin Huan

This technology will be funded by the wealthiest individuals in the world. Therefore, it’s their own world views, philosophies and morality that will be reflected in how these AIs work. This fact should be profoundly disturbing to any rational human being, rich or poor, capitalist or communist, gay or straight, atheist or religious.