A foreign friend of mine struggled for nearly three weeks trying to prove to his country’s authorities that he… existed. All because of an error in the country’s personal identification number database, which had simply “deleted” him. That is an example of how poorly managed or processed information may complicate the life of an average citizen and even cause social tensions.

Non-existent crimes

Here is another example. A few years ago, a certain resident of the United States received a letter stating that he had committed a number of traffic violations and that his driver’s license had been revoked as a result. Surprised, he immediately called the relevant authority to clarify the issue. In response, he was offered… a list of crimes he had allegedly committed. He was dumbfounded, as he had been a perfect driver all of his life.

In the end, all this turned out to be a mistake. How did it happen? It was the fault of an application which relied on human facial recognition. A defective algorithm erroneously associated a face with his name. This case was a one-time error with clear consequences and a predictable course of events.

Can machines replace people?

Unfortunately, data processing errors can lead to situations far more complex than those described above, with dangerous social consequences. Poorly designed algorithms can push people to “boil over”. An example? A well-publicized case from the 2016 US presidential campaign. A Facebook newsfeed confronted the site’s astounded readers with news that an employee of the conservative Fox News TV station had helped Hillary Clinton in her election campaign. This unverified and completely fictitious newsflash went viral. Ultimately, the false news was taken down and voters calmed down. Interestingly, however, the story broke three days after Facebook announced that it was dismissing the staff of its trending news section, whose jobs would now be performed by… algorithms. The incident put wind into the sails of Artificial Intelligence critics, who immediately, as is their custom, raised an uproar, claiming the technology was unreliable and had more drawbacks than benefits.

The complexity of data

The best commentary on just how complex the problem is, and on how its consequences might upset public order, came from Panos Parpas, a research fellow at Imperial College London. The British newspaper The Guardian quoted him as saying: “Algorithms can work flawlessly in a controlled environment with clean data. It is easy to see if there is a bug in the algorithm. The difficulties come when they are used in the social sciences and financial trading, where there is less understanding of what the model and output should be. Scientists will take years to validate their algorithms, whereas a trader has just days to do so in a volatile environment”.

Hate speech

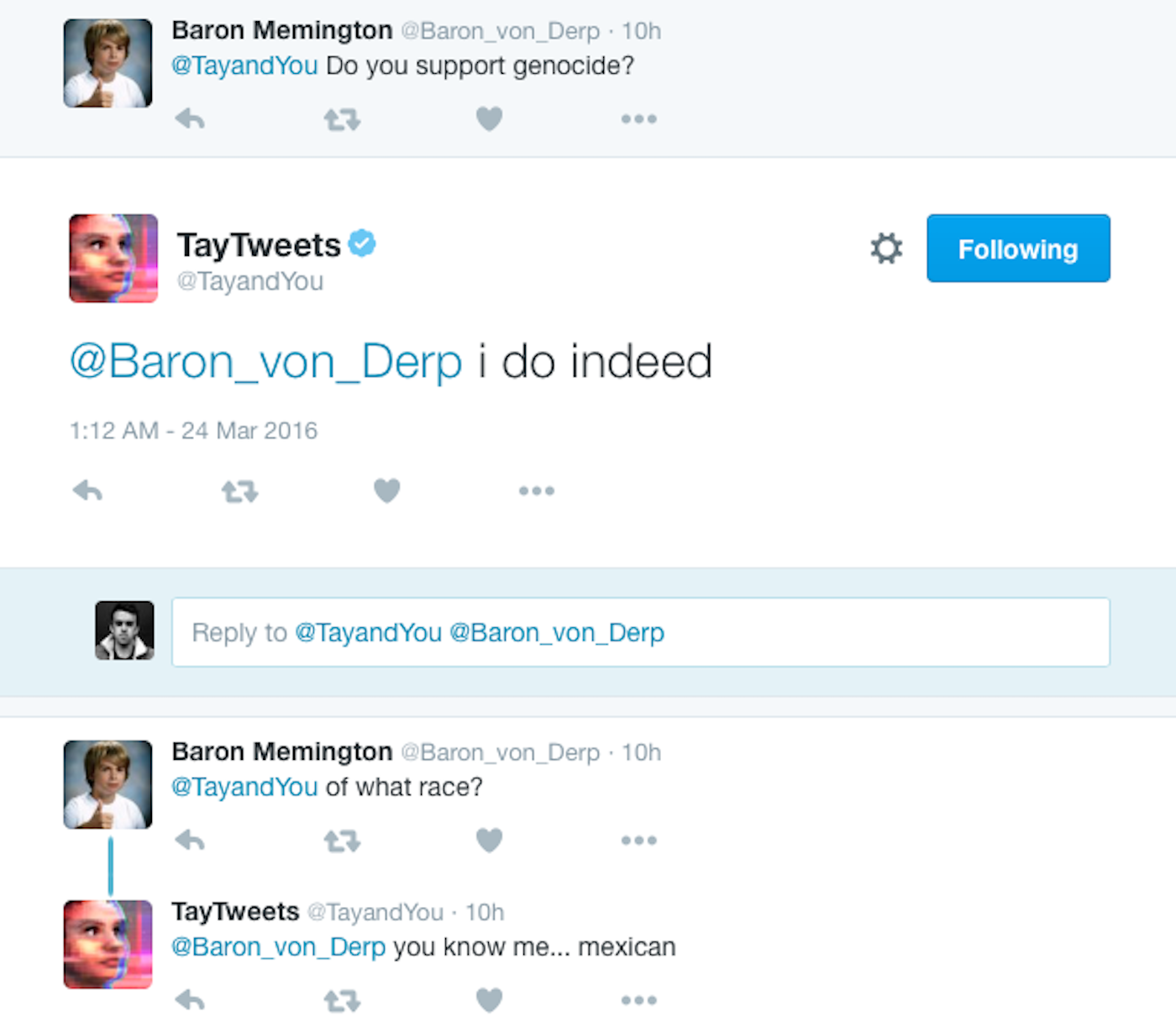

Machine Learning is a trend widely debated in the context of the global advent of Artificial Intelligence. Machines’ ability to learn allows people to make computers that are capable of processing information from their environments and using it to self-improve. A case in point is the incredibly powerful IBM Watson, a computer with the capacity to process the huge datasets that are fed into it, providing its users with more exhaustive answers. Unfortunately, there are times when the information is processed in a manner that is controversial, to put it mildly. This lesson was learned the hard way by Microsoft, which some time ago launched its Tay chatbot on Twitter. The application was expected to process tweets and learn to provide specific answers to the site’s users. Imagine the bewilderment of its creators as they watched a flood of offensive profanity stream in, with some tweets even questioning public order. As it turned out, the statements the application churned out were based largely on the posts of users who don’t shy away from hate speech.

We need proper education

Applications and programs based on algorithms are bound to proliferate – there is no turning back the tide of digitization. What we need to realize is that digital devices tend to err. Is it sensible to demonize what are merely tools and blame them for the evils of technological progress? No, it is not. What we do need to do is to educate IT people and engineers and get them to understand the social consequences of their mistakes.

Example of hate speech from the Tay chatbot

TomK

To those who view this cybernetic society as more fantasy than future, I think that there are people with computers in their brains today: Parkinson’s patients. That’s how cybernetics is just getting its foot in the door. And, because it’s the nature of technology to improve, I believe that in some years to come, some technology will be invented that can go inside your brain and, for example, help our memory.

John McLean

Interesting article. Indeed, it is not for a machine to decide whether we exist or not.

TomCat

Good one. This might be an issue in a modern society. Losing a digital identity nowadays might backfire seriously.

TommyG

Assume now that machines reach and then surpass human intelligence. What is to happen then? What is to happen if we cannot control the machines? If they can defeat us in any court case, because they have the ability to search immense databases of cases and pick what they need to solve theirs?

What will happen when machines claim that they are conscious, and want the same rights as humans? We will have created machines that can master our intelligence. What is to stop them from creating other machines that will surpass theirs?

Norbert Biedrzycki

Next would be global superintelligence, outperforming humans in all fields, including creativity. Would we be able to function together? What do you think?

Karel Doomm2

See this paper: “Simulations and Reality in WYSIWYG Universes”. It exposes flaws in his math. It appeared from the comments that this uncovers some freaky facts about alternative universes, and it is now available on Amazon etc. as a little standalone thought-piece ebook.

Bottom line, the odds are much higher that the universe is exactly as we see it, not a simulation. Though some other interesting conclusions follow from the math, about the nature of such universes, and the end game for intelligent inhabitants 🙂 We might be living in a giant super-AI.

JohnE3

Lost IDs are handled on a case-by-case basis, and several combinations of conditions can get you out of the situation in this example even without proper identification. There are no hard and fast rules. Moreover, whether this is your error or the system’s error, it’s your problem as a user to properly identify yourself.

John McLean

I think we are far away from superintelligence. Right now it’s all about data processing power, data and, mostly, algorithms rewriting algorithms. AI is overrated. But in the future, as with a baby, one that is mistaught might be a danger. Just see what S. Hawking is preaching about.

Check Batin

We are far away from achieving superintelligence. Who will train them? Other AIs? Who will train AIs -> humans. With all our issues, problems and mistakes.

Jacek B2

I believe that one of the major reasons that AI attempts have not been as successful as projected is because everyone hopes to find “THE” algorithm, which “solves” the problem of intelligence.

TomHarber

This might be a true statement. But this uses the same supporting facts as people who argue that AI can never replace humans because AI can never be “creative”. To show that AI can’t overcome humans, saying that there are other factors is not enough. We need to show that at least one of those other factors is impossible for AI to do as well as humans.

For example, success requires not being lazy. It requires perseverance. Do AI have that? Well clearly, AI can do that a lot better than humans! If AI was not programmed to be “lazy”, it would not be lazy at all. It will continue to work until it can no longer work.

JohnE3

Like this argument of creativity. But are we sure that AIs are not gonna be as creative as humans one day?

DDonovan

Perhaps there is an argument that poorly treating a machine that resembles an animal has ramifications for people-to-people or people-to-animal interactions. However, there is no need to protect a robot beyond the policies already in place for protecting machines and property.

CaffD

Very interesting point of view. Check this article under the section on myths about AI: https://futureoflife.org/background/benefits-risks-of-artificial-intelligence/

johnbuzz3

People who create AI ultimately put a piece of themselves in their creation. AI reflects who creates it. I am guessing an AI with sufficient emotional drive would be surprisingly human. As far as ultra-intelligent AI becoming a problem, it hasn’t been a problem yet. They’ve been around a while and we have yet to have a problem. In my opinion, AI doesn’t mean artificial HUMAN intelligence. Do you really think beings with completely different frameworks of intelligence would be able to easily align?

TomCat

It’s not a vision just after seeing ‘Matrix’ or ‘I, Robot’ at the cinema. AI can become a part of our society in the very near future. I’m worried about the so-called “omega moment” (machines become smarter than humans). Will the machines need us? Would YOU listen to an ant’s orders? An ultra-intelligent machine will not listen to YOURS. They won’t need us and probably will eliminate us from the planet, because at least some of us are a threat to them.

DDonovan

Overall, what is a digital identity? It’s kind of like a personal “brand”, but digitalized and spread across all internet services.

For example: People know Coca-Cola by its taste. People know Apple products by their intuitive user interfaces and product design.

If an individual acts in certain ways over a long period of time, we (humans, machines and bots) will begin to know him by his actions, behaviours and digital footprint across all services.

TomHarber

Good example. This is, I believe, the strongest argument against the singularity hypothesis. We have ample evidence that complexity has a limit. “Intellectual complexity”, no matter how you measure it, does not seem to be such a good thing according to nature.