As its users, we have grown to take technology for granted. Hardly anything these days is as commonplace and unremarkable as a personal computer that crunches numbers and enables us to read files and access the Internet. Will computers ever amaze us again in any way? Some potential for amazement may lie in cognitive computing – a skill-set widely considered to be the most vital manifestation of artificial intelligence.

Back during my university days, and later at the outset of my professional career, I wrote software. I earned my first paycheck as a programmer. I often stayed up late and even pulled all-nighters correcting endless code errors. There were times when the code I wrote finally began to do just what I wanted it to, serving its intended purpose. In time, such moments became more and more frequent. I often wondered if programmers would ever be replaced. But how and with what? The science fiction literature I was into abounded with stories on robots, artificial intelligence and self-learning technologies that overstepped their boundaries and began to act against the rules, procedures and algorithms. Such technologies managed to learn from their mistakes and accumulate experience. It was all science fiction then. A computer program that did anything other than the tasks assigned to it by its programmer? What a delusion. But then I came across other concepts, such as self-learning machines and neural networks.

As it turns out, a computer program may amass experience and apply it to modify its behavior. In effect, machines learn from experience that is either gained directly by themselves or implanted into their memories. I have learned about algorithms that emulate the human brain. They self-modify in the search for the optimal solutions to given problems. I have learned about cognitive computing, and it is my reflections on this topic that I would like to share in this article.

As it processes numbers, a computer watches my face

All the existing definitions of cognitive computing share a few common features. Generally speaking, the term refers to a collection of technologies that result largely from studies on the functioning of the human brain. It describes a marriage of sorts of artificial intelligence and signal processing. Both are key to the development of machine consciousness. They embody advanced tools such as self-learning and reasoning by machines that draw their own conclusions, process natural language, produce speech, interact with humans and much more. All these are aspects of collaboration between man and machine. Briefly put, the term cognitive computing refers to a technology that mimics the way information is processed by the human brain and enhances human decision-making.

Cognitive computing. What can it be used for?

Cognitive computing emulates human thinking. It augments the devices that use it while empowering the users themselves. Cognitive machines can actively understand natural language and respond to information extracted from natural language interactions. They can also recognize objects, including human faces. Their sophistication is unmatched by any product ever made in the history of mankind.

Time for a snack, Norbert

In essence, cognitive computing is a set of features and properties that make machines ever more intelligent and, by the same token, more people-friendly. Cognitive computing can be viewed as a technological game changer and a new, subtle way to connect people and the machines they operate. While it is neither emotional nor spiritual, the connection is certainly more than a mere relationship between subject and object.

Owing to this quality, computer assistants such as Siri (from Apple) are bound to gradually become more human-like. The effort to develop such features will focus on the biggest challenge of all faced by computer technology developers. This is to make machines understand humans accurately, i.e. comprehend not only the questions people ask but also their underlying intentions and the meaningful hints coming from users who are dealing with given problems. In other words, machines should account for the conceptual and social context of human actions. An example? A simple question about the time of day put to a computer assistant may soon be met with a matter-of-fact response followed up by a genuine suggestion: “It is 1:30pm. How about a break and a snack? What do you say, Norbert?”

Figure 1. How Siri works. Source: HowTechnologyWork

Dear machine – please advise me

I’d like to stop here for a moment and refer the reader to my previous machine learning article. In it, I said that machine technology enables computers to learn, and therefore analyze data more effectively. Machine learning adds to a computer’s overall “experience”, which it accumulates by performing tasks. For instance, IBM’s Watson, the computer I have mentioned on numerous occasions, understands natural language questions. To answer them, it searches through huge databases of various kinds, be it business, mathematical or medical. With every successive question (task), the computer hones its skills. The more data it absorbs and the more tasks it is given, the greater its analytical and cognitive abilities become.
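The idea that a machine's answers improve as it accumulates experience can be illustrated with a toy learner. This is only a minimal sketch and makes no claim about how Watson works: the class name `RunningMeanClassifier` and its methods are hypothetical, invented for this illustration. It keeps a running average of the values it has seen for each label and predicts whichever label's average lies closest.

```python
class RunningMeanClassifier:
    """Toy learner (hypothetical): one running mean per label, predict the closest."""

    def __init__(self):
        self.means = {}   # label -> mean of the values seen so far
        self.counts = {}  # label -> number of examples seen so far

    def learn(self, value, label):
        """Fold one labeled example into the accumulated experience."""
        n = self.counts.get(label, 0) + 1
        mean = self.means.get(label, 0.0)
        # Incremental mean update: no need to store past examples.
        self.means[label] = mean + (value - mean) / n
        self.counts[label] = n

    def predict(self, value):
        """Return the label whose mean is nearest, or None with no experience."""
        if not self.means:
            return None
        return min(self.means, key=lambda label: abs(value - self.means[label]))


learner = RunningMeanClassifier()
for value, label in [(1.0, "low"), (3.0, "low"), (10.0, "high")]:
    learner.learn(value, label)
print(learner.predict(4.0), learner.predict(7.0))
```

Each call to `learn` shifts the stored averages, so the same question can receive a different answer once more examples have been seen.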

Machine learning is already a sophisticated, albeit very basic machine skill with parallels to the human brain. It allows self-improvement of sorts based on experience. However, it is not until cognitive computing enters the picture that users can truly enjoy interactions with a technology that is practically intelligent. The machine not only provides access to structured information but also autonomously writes algorithms and suggests solutions to problems. A doctor, for instance, may expect IBM’s Watson not only to sift through billions of pieces of information (Big Data) and use it to draw correct conclusions, but also to offer ideas for resolving the problem at hand.

At this point, I would like to provide an example from daily experience. An onboard automobile navigation system relies on massive amounts of topographic data which it analyzes to generate a map. The map is then displayed, complete with a route from the requested point A to point B, with proper account taken of the user’s travel preferences and prior route selections. This relies on machine learning. However, it is not until the onboard machine suggests a specific route that avoids heavy traffic, while incorporating our habits, that it begins to approximate cognitive computing.
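The route-selection part of this example can be sketched in a few lines. The code below is an assumption-laden illustration, not a description of any real navigation product: it runs Dijkstra's shortest-path algorithm over a tiny road graph, and the `traffic` and `habit_bonus` parameters are hypothetical multipliers standing in for live congestion data and learned driver preferences.

```python
import heapq


def best_route(graph, start, goal, traffic=None, habit_bonus=None):
    """Dijkstra's shortest-path search over travel times in minutes.

    graph:       {node: {neighbor: base_minutes}}
    traffic:     {(node, neighbor): multiplier >= 1} (hypothetical congestion factor)
    habit_bonus: {(node, neighbor): factor in (0, 1]} (hypothetical discount
                 for segments the driver usually takes)
    """
    traffic = traffic or {}
    habit_bonus = habit_bonus or {}
    queue = [(0.0, start, [start])]  # (cost so far, node, path taken)
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor in settled:
                continue
            edge = (node, neighbor)
            # All factors are positive, so Dijkstra's assumptions still hold.
            weight = minutes * traffic.get(edge, 1.0) * habit_bonus.get(edge, 1.0)
            heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []  # goal not reachable


roads = {"A": {"C": 10, "D": 15}, "C": {"B": 10}, "D": {"B": 10}, "B": {}}
print(best_route(roads, "A", "B"))                             # no traffic data
print(best_route(roads, "A", "B", traffic={("C", "B"): 3.0}))  # jam on C -> B
```

With no traffic data, the search picks the route through C. Once the C-to-B segment is marked as congested, it switches to the route through D.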

Number crunching is not everything

All this is fine, but where did today’s engineers get the idea that computers should do more than crunch numbers at a rapid pace? The head of IBM’s Almaden Research Center Jeffrey Welser, who has spent close to five decades developing artificial intelligence, offered this simple answer: “The human mind cannot crunch numbers very well, but it does other things well, like playing games, strategy, understanding riddles and natural language, and recognizing faces. So we looked at how we could get computers to do that”.

Efforts to use algorithms and self-learning to develop a machine that would help humans make decisions have produced a spectacular effect. In designing Watson, IBM significantly raised the bar for the world of technology.

How do we now apply it?

The study of the human brain, which has become a springboard for advancing information technology, will – without a doubt – have broader implications in our lives, affecting the realms of business, safety, security, marketing, science, medicine and industry. “Seeing” computers that understand natural language and recognize objects can help everyone, from regular school teachers to scientists searching for a cure for cancer. In the world of business, the technology should – in time – help use human resources more efficiently, find better ways to acquire new competencies and ultimately loosen the rigid corporate rules that result from adhering to traditional management models. In medicine, much has already been written on doctors’ hopes associated with the excellent analytical tool – IBM’s Watson. In health care, Watson will go through a patient’s medical history in an instant, help diagnose health conditions and enable doctors to instantly access information that could previously not be retrieved within the required time horizon. This may become a major breakthrough in diagnosing and treating diseases that cannot yet be cured.

Watson has attracted considerable interest from the oncology community, whose members have high hopes for the computer’s ability to rapidly search through giant cancer databases (which is crucial in cancer treatment) and provide important hints to doctors.

Combined with quantum computing, this will become a robust tool for solving complex technological problems. Even today, marketing experts recognize the value of cognitive computing systems, which are playing an increasingly central role in automation, customer relationships and service personalization. Every area of human activity in which data processing, strategic planning and modeling are of importance will eventually benefit from these technological breakthroughs.

The third age of machines

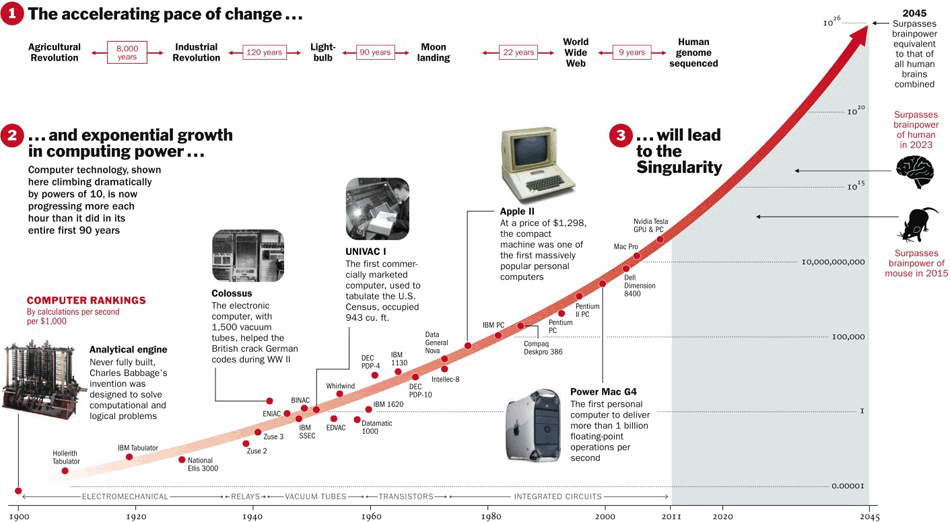

Some people go as far as to claim that cognitive computing will begin the third age of IT. Early in the 20th century, computers were seen as mere counting machines. Starting in the 1950s, they began to rely on huge databases. In the 21st century, computers learned to see, hear and think. Since human thinking is a complex process whose results are often unpredictable, perhaps we could presume that a cognitive union of man and machine will soon lead to developments that are now difficult to foresee.

Machines of the future must change the way people acquire and broaden their knowledge, to achieve “cognitive” acceleration. However, regardless of what the future may bring, the present day, with its ever more efficient thinking computers, is becoming more and more exciting.

Exponential growth in technological capabilities will lead to Singularity. Source: Sneaky Magazine


TommyG

I’m an absolute noob at quantum computing, i.e. I know next to nothing about it, but is it true that this form of computation is only applicable to problems with check algorithms available, i.e. where the solution can be verified?

Oscar2

Although it is hard to predict what the exact components of a future quantum internet will be, it is likely that we will see the birth of the first multinode quantum networks in the next few years. This development brings the exciting opportunity to test all the ideas and functionalities that so far only exist on paper and may indeed be the dawn of a future large-scale quantum internet.

CaffD

So some problems, even though they may seem very simple, are inherently exponential. Whenever we add a little bit to the problem size, the time it takes multiplies. For most of these kinds of problems, that means we quickly reach a point where it’s physically impossible for us to compute an answer.

Norbert Biedrzycki

Any computation that can be done by hand can be performed by a classical Turing machine (given enough memory). So does this mean that quantum computers are capable of computing things that are impossible to do with pen and paper?

Adam Spikey

Customer service support is not one of the most desired jobs. So this trend is a must to keep operations going in a digital world.

johnbuzz3

The next few years will see a dramatic advance in both quantum hardware and software. As calculations become more refined, more industries will be able to take advantage of applications including quantum chemistry.

Norbert Biedrzycki

Any classical computer (be it a theoretical Turing machine, or simply your phone) would happily solve the problem given a small enough data input. The problem in itself is not impossible for classical computers; it just quickly becomes unmanageable as the size of the input increases.

And99rew

Quantum algorithms for data analysis have the potential to speed up the computations substantially in a theoretical sense, but great challenges remain in achieving quantum computers that can process such large amounts of data.

Tom Jonezz

Ugh, let’s just hope that there are techniques to design quantum computers that are sped up by quantum algorithms.

Sounds like profitable nuclear fusion: ready in 20 years

TomK

God, it’s great that there are people here with the cognitive chops to have a productive conversation on this topic.

johnbuzz3

Google, IBM, and Intel have each used this approach to fabricate quantum processors ranging from 49 to 72 qubits. Qubit quality has also improved.

Norbert Biedrzycki

In computational terms it’s hard to draw direct parallels from traditional state machines to spinning, non-local behaviors because we don’t have practical applications yet, but at a high level using traditional methods as a frame of reference on physical component capability, it’s both the speed at which information is processed and the size of that information. It’s not just supersizing bandwidth and processing power, it’s an explosion in ridiculous orders of magnitude of both.

WIRED recently did a really good primer on QC that I recommend anyone remotely interested check out – it’s extremely accessible (the whole point of the video):

https://www.youtube.com/watch?v=OWJCfOvochA

John Accural

Adversarial. Probability will suffice for this problem; machine learning will be used only if you have an unlimited number of tries or a large amount of historical data.

Adam Spikey

Great perspective on the underlying speed and the reasons why AI has progressed. Sometimes you need to understand the past a little to see more clearly how things will go in the future.

Norbert Biedrzycki

Thank you

johnbuzz3

Interesting piece

CabbH

More and more independent thinkers are realizing that being an employee is the equivalent of putting all your money into one stock, and that a better strategy is to diversify your portfolio. So you’re seeing a lot more people looking to diversify their careers.

AdaZombie

And the final stage will come when these quantum computers finally surpass their conventional cousins, making it possible to create distributed networks of computers capable of carrying out calculations that were previously impossible, and instantly and securely share them around the world.

Tom Jonezz

Normal economic system: Owner hires people to help with operations and handle demand. Owner pays a portion of his revenue to these employees as wages. Employees spend these wages at other businesses to acquire things they like/need.

Economic system with AI/Robots: Employer buys/develops a bunch of AI programs and robots to handle demands for his products. Employer pays the costs for development/purchase for these tools upfront and a small regular maintenance fee. Employer keeps all other revenue. So no employees to spend money at other businesses. So fewer overall customers. So less overall economic activity. So fewer people acquiring the things they want/need. Number of people remain the same, however. Just far less of them able to see to their own needs.

Yeah…that’s how it’s going to go. AI/automation will allow all sorts of wonderful things to happen. But it will also lead to some very, very bad things. It’s best if our countries acknowledge that and make adjustments. Otherwise…bad times. Very, very bad times.

Jack666

Very interesting read. Thank you

Don Fisher

A number of high-profile technology leaders and scientists have concerns about where the technology being developed by DeepMind and companies like it can be taken. Full AI — a conscious machine with self-will — could be more dangerous than nukes

John McLean

Can’t find it now, but I saw a talk he gave wherein he said that amplifying human intelligence would just bring about a superintelligent AI faster, because you’ll have smarter AI scientists but humans will always be limited by our meat brains.

DDonovan

Get rid of the guns and ticking time bomb and shit blowing up and the small ragtag band of revolutionaries is fighting against the AI. You go several days into the future when almost everyone is quickly assimilated against their will and all the problems plaguing the world have been fixed.

Mac McFisher

A case in point is the incredibly powerful IBM Watson, a computer with a capacity to process huge datasets that are fed into it, providing its users with more exhaustive answers. Unfortunately, there are times when the information is processed in a manner that is controversial, to put it mildly.

TomHarber

As a species, we need a successful transition to an era in which we share the planet with high-level, non-biological intelligence. We don’t know how far away that is, but we can be pretty confident that it’s in our future. Our challenge is to make sure that goes well.

TomCat

If an autonomously driving car is driving along a road, it should avoid obstacles that stand in the way. Suppose this obstacle is a very big bush that has fallen on the road because of a storm. Suppose furthermore that on the left side of the road we have a big concrete wall, and on the right side three little children. The car will choose the path of least resistance and will kill the three little children. Is this ethically correct? No. Ethical question right there my friend, it’s not as easy as it looks.

JohnE3

Social awkwardness and authoritarianism would bar her from the job much earlier on if she didn’t fall into the role by lack of opposition and without a real vote from the public.

Imagine that instead of getting a free year on the job and then fighting to keep it (by hiding from voters), this campaign would have been her first real exposure on prime-time TV. She would have needed to get out there much earlier and improve or fail.

Adam Spikey

As they say, the devil is in the details. The human brain is far, far, far more complicated than the weather.

Again, these are the laws of physics you’re trying to push against in your vision here, and there’s just no way you can make it happen in reality. Your vision here is just fantasy. When it comes to complex systems, everything is an important part. Take a look at chaos theory and complexity if you want to understand this.

John Accural

Interesting indeed. What about self-conscious machines?

TonyHor

I’m sorry Dave, I can’t do that. – HAL 9000

See? All potential robot/human problems solved with three simple lines of code. What could possibly go wrong?

John McLean

Considering that one of the cutting-edge applications for semi-autonomous robots currently is flying around and bombing people, I think the laws of robotics are quite clearly not of much value. At least not to the people who are actually designing and using the robots. You can say that autonomous drones should be banned, but you might as well save the wear and tear on your fingers because they aren’t going to be. What is more relevant is whether a robot designed for killing people should be programmed with any ethical safeguards that will prevent it from killing the wrong people.

CaffD

There is no such thing as an ethical question for any of the machines listed in the article. They are all weak AIs with predictable answers to these “ethical dilemmas”. A machine acts objectively, relative to the human experience, ergo it will never conceive an idea, even remotely, resembling ethics.

For those needing an explanation: Moral and ethical dilemmas have always been, and will always be, the responsibility of the designer, because it is he who imparts a moral compass to the machine.

Jacek B2

Interesting point

Adam T

In human history, every effort to diplomatically restrict military development has failed. The fear of the other side not playing by the rules guarantees that there will be no rules.

Simon GEE

Ethical machines. What about Asimov’s laws?

TomK

And they’re not just disruptive for computing… like the internet, they’re globally disruptive phenomena.

Having said that, you can definitely get more initial value out of cognitive computing … although once you have a CC system and ecosystem set up that provides sensory experiences that map to 95%+ of your sensory bandwidth (think about the flow of information as digital data; how vision and sound compare to the rest)… you’ve created a system that is able to make any instance of digital data far more valuable than it currently is.

Adam Spark Two

For sure cognitive computing has the potential to transform personal banking, wealth management and trading. Consumers can expect benefits to quality of information, products and services and speed of service if the banks choose to utilize AI enhanced digital services like bots.

Andrzej44

What if you had a personal banking bot that warns you when your funds are getting low? Or reminds you that you usually buy flowers at a certain time of the year for your mother’s birthday and that you haven’t this year? With cognitive computing, this scenario might soon become a reality. Cognitive computing broadly refers to technology platforms which leverage artificial intelligence (AI) and signal processing to mimic human behavior. They are usually self-learning systems that use a variety of data, predictive analytics and natural language processing.

TomK

Interesting comments, but this is applicable not only to banking.

Andrzej44

The specific scenario is that we wanted to reduce the IT support cost (on calls, remote support, chat, online call) by replacing it with cognitive computing. Are there any better ideas?

Simon GEE

You cannot remove IT support and expect to function. You can only attempt to streamline it and reduce it.

If you have IT support (I’m assuming helpdesk) constantly solving the same issues, just make videos that users can seek out and that show them how to fix the issues themselves.

If you want to replace an entire IT support department with AI then you’re going to fail. Wait for Google to do it first. 90% of solving the unknown starts with Google.

TonyHor

Very reasonable point

Check Batin

How many movies have warned us about the dangers of “AI”?

The Terminator, The Matrix, I, Robot, Dark Star, Wall-E, 2001, Battlestar Galactica, Godzilla Vs MechaGodzilla, etc.

Changing the name from “AI” to “Cognitive” magically solves everything. Obviously…

Check Batin

For some reason it feels like, as a species, we are always going to be the best cognitive computers. And maybe the best thing to do is to keep that trait and enhance it in humans, not machines.

I have nothing against the technology. I’m glad it can help with cancer research, though I’m not so happy about insurance companies and retailers collecting metadata and playing tricks for profit. The point is: are we as humans losing the edge of our biological organic computers by creating and depending on this technology? Perhaps for the first time I chuckle at the possibility of walking this road to a Singularity.

John Accural

Cognitive computing is the biggest disruption in computing since the Internet, and in time it will prove that conscious awakening through robots is just an evolutionary step forward, in maybe twenty or twenty-five years. It’s truly amazing stuff, and almost nobody’s talking about it. Ah well, soon it will be here, and BELIEVE me, the NSA and the CIA are WELL aware of it already.

Jack666

Some say that cognitive computing represents the third era of computing: we went from computers that could tabulate sums (1900s) to programmable systems (1950s), and now to cognitive systems, which are ALMOST self-intelligent. According to R. Kurzweil, we should see the birth of SuperIntelligence in 2045.

Mac McFisher

Cognitive systems, most notably IBM Watson, rely on deep learning algorithms and neural networks to process information by comparing it to a teaching set of data. The more data the system is exposed to, the more it learns, and the more accurate it becomes over time, and the neural network is a complex “tree” of decisions the computer can make to arrive at an answer.

Adam Spark Two

IT-driven organizations need to account for this shift by providing specialized training and handing down more decision power to lower levels of customer service staff. Resolution times should in theory also be much faster because staff won’t be bogged down by answering frequently asked questions. The caveat here is that while customers get used to talking to chatbots, the customer service team might need to double as an educational team, teaching customers how to self-serve via chatbot as well.