Each of us makes numerous decisions daily that concern essential ethical issues. Our daily choices between good and evil are largely habitual; many of them we do not even notice. However, in the world of artificial intelligence, morality is becoming increasingly problematic. Can robots sin?

Algorithm-based technologies, machine learning and process automation have come to a point in their development where morality-related questions and answers may seriously affect the development of all of our modern technologies and even our entire civilization.

The development of artificial intelligence rests on the assumption that the world can be improved. People may be healthier and live longer, customers may be ever happier with the products and services they receive, car driving may become more comfortable and safer, and smart homes may learn to understand our intentions and needs. Such a possibly utopian vision had to crystallize in the minds of IT system developers to make possible the huge technological advances that are still continuing. When we finally found that innovative products and services (computers that understand natural language, facial recognition systems, autonomous vehicles, smart homes and robots) can really be made, we began to have doubts and misgivings and started to ask questions. Tech companies realize that their abstract, intangible products (software, algorithms) inevitably entail fundamental, classic and serious questions about good and evil. Below are a few basic ethical challenges that sooner or later will force us to make definitive choices.

Revolution in law

Large US-based law firms have recently begun working closely with ethicists and with programmers who develop new algorithms on a day-to-day basis. Such activities are driven largely by the initiatives of US politicians, who are increasingly aware that legal systems are failing to keep up with technological advances. I believe that one of the biggest challenges for large communities, states and nations is to modify the legislative system so that it regulates artificial intelligence and responds to the major AI issues. We need this to feel safe and to allow entire IT-related fields to continue to grow. Technology rollouts in business must not rely exclusively on intuition, common sense and the rule that “everything which is not forbidden is allowed”. Sooner or later, the absence of proper regulations will claim victims, not only among innocent people but also among today’s key decision-makers.

Foxconn factory production line

Labor market regulation

The robotization of entire sectors of industry is now a fact of life. Fields such as logistics, big data, and warehousing are poised to steadily increase the number of installed industrial robots. There is a good reason why the use of robots is the most common theme of artificial intelligence debates. Such debates are accompanied by irrational fears, which many social groups, including politicians, may exploit for their immediate benefit. What can be done to prevent scaring people with robots from becoming a habit? On the other hand, what should one do about people losing jobs to machines? Will the problems be fixed by imposing a tax on companies that use a large proportion of robots? Should employers be aware of the moral responsibility that comes with employing robots that in certain fields may even end up supervising humans? Distinctions between good and evil in this field are crucial and should not be dismissed lightly. Although automation and robotization benefit many industries, they may also give rise to exclusion mechanisms and contribute to greater social inequality. These are the true challenges of our time and we can’t pretend they are inconsequential or irrelevant.

Software control

According to the futurologist Ray Kurzweil, humanity will soon witness the emergence of a singularity, a superintelligence. We are at a point in the development of our civilization and technology where computers’ computational power is beginning to exceed that of the human brain. Many cultural anthropologists see this as crossing a line, the true birth of artificial intelligence and perhaps the beginning of AI becoming independent of humans. If their hypothesis is true, does that mean that artificial intelligence systems require special oversight? Of what kind? How does one create IT industry standards that distinguish between behaviors, projects and production that are and are not acceptable? According to Jonathan Zittrain, Law Professor at Harvard University, the growing complexity of IT systems and their increasing interconnectivity make control over them virtually impossible. This may cause a number of problems, including difficulties with defending and upholding certain moral imperatives.

Ray Kurzweil: The Coming Singularity

Autonomous vehicles make choices

A few months ago I wrote of the ethical issues associated with the appearance of autonomous vehicles on our roads. I raised the issue of cars having to make ethical choices on the road, which consequently places responsibility in the hands of specific professionals, such as the programmers who write algorithms and the CEOs who run car manufacturing companies. When a child runs out onto a street, a self-driving car is faced with a choice that it has to make as if by itself. The choices it makes actually depend on the algorithms hardwired into its system. Theoretically speaking, there are three options available in what we might call ethical programming. One is that what counts in the case of an accident or a threat to human life is the collective interest of all accident participants (i.e. the driver, the passengers and the child on the road). Another seeks to protect the lives of pedestrians and other road users. Yet another gives priority to protecting the lives of the driver and the passengers. The actual response depends fully on the algorithm selected by a given company. Manufacturers will be looking to choose the most beneficial option in terms of their business interests, insurance terms and ethical principles. No matter which choice they make, they will have to protect their algorithm-writing programmers against liability.
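To make the three options more tangible, here is a minimal Python sketch. It is purely illustrative and does not describe how any manufacturer actually programs its vehicles: the `Policy` names, the `Maneuver` fields, the risk numbers and the `choose_maneuver` function are all hypothetical assumptions introduced for this example. It only shows that the same situation can lead to three different decisions depending on which priority rule has been selected.

```python
from dataclasses import dataclass
from enum import Enum


class Policy(Enum):
    # Hypothetical labels for the three options described in the text.
    COLLECTIVE = "collective"        # interest of everyone involved
    PROTECT_OTHERS = "others"        # pedestrians and other road users first
    PROTECT_OCCUPANTS = "occupants"  # driver and passengers first


@dataclass(frozen=True)
class Maneuver:
    name: str
    occupant_risk: float  # assumed harm estimate for people in the car, 0..1
    others_risk: float    # assumed harm estimate for people outside, 0..1


def choose_maneuver(options, policy):
    """Return the maneuver with the lowest cost under the given policy."""
    def cost(m):
        if policy is Policy.COLLECTIVE:
            # Everyone counts equally: minimize the summed risk.
            return (m.occupant_risk + m.others_risk, 0.0)
        if policy is Policy.PROTECT_OTHERS:
            # Risk to outsiders decides; occupant risk only breaks ties.
            return (m.others_risk, m.occupant_risk)
        # PROTECT_OCCUPANTS: occupant risk decides; others break ties.
        return (m.occupant_risk, m.others_risk)

    return min(options, key=cost)


# Made-up numbers for the "child runs onto the street" situation.
OPTIONS = [
    Maneuver("brake_straight", occupant_risk=0.1, others_risk=0.6),
    Maneuver("swerve_into_barrier", occupant_risk=0.7, others_risk=0.05),
    Maneuver("brake_and_swerve", occupant_risk=0.3, others_risk=0.2),
]

if __name__ == "__main__":
    for policy in Policy:
        print(policy.name, "->", choose_maneuver(OPTIONS, policy).name)
```

With these invented numbers each policy selects a different maneuver, which is exactly why the choice of algorithm, and the question of who answers for it, matters so much.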

How to retain privacy rights?

Privacy is an exceptionally sensitive issue in our times. Our personal data is processed incessantly by automated business and marketing systems. Our social security numbers and names, as well as our internet browsing and purchase histories, have become an object of interest to corporations worldwide. In this globalized world, we are subject to constant pressures, some more subtle than others, to make our data available every step of the way.

What is privacy today and what right do we have to protect it? This is one of the key questions regarding our lives. In the time of social media, in which every piece of information about our lives has been practically “bought out” permanently by major portals, our privacy has been redefined. As we move into an electronics-filled smart home in which all the devices learn about our needs, we must realize that their knowledge consists of specific data that can be processed and disclosed. I therefore believe that the question of whether our right to privacy will in time become even more vulnerable to external influences and social processes is fundamental.

Bots poised to rule

Bots these days are made to compete in tournaments. All participating bots are given the Turing test, which is a classic way of checking whether one is dealing with a computer or a human. No one should be surprised that the best bots exceed 50 percent success rates. This means that more than half of the people participating in the experiment are unable to tell whether they have communicated with a human or a machine. While such laboratory competitions may appear to be innocent fun, the widespread use of bots in real life raises a number of ethical questions. Can a bot cheat or use me? Can a bot manipulate me and influence my relationships with my co-workers, service providers and supervisors? And when I feel cheated, where do I turn for redress? After all, accusing a bot of manipulation sounds totally preposterous. Machines are increasing their presence in our lives and beginning to play with our emotions. Here, the question of good vs. evil acquires particular significance.

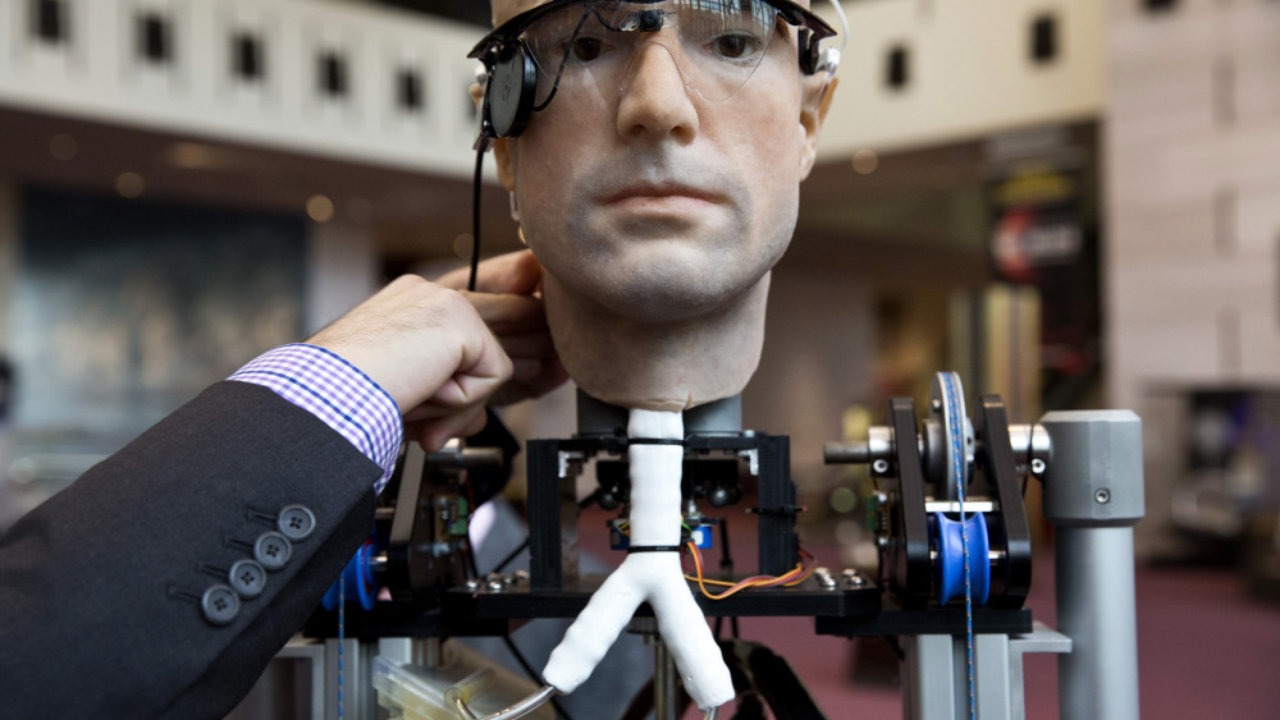

Frank – a bionic robot built of prostheses and synthetic organs

Will robots tell good from evil?

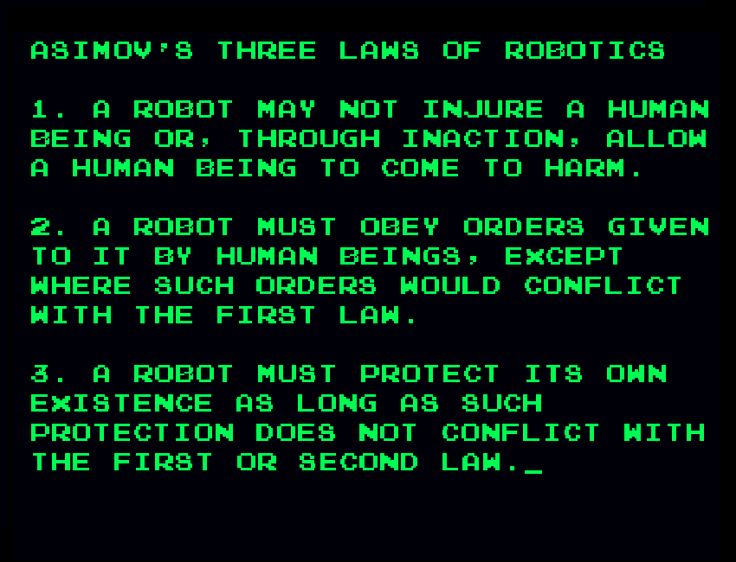

The most interesting ethical dilemmas specifically concern robotization. The questions are analogous to those asked with regard to autonomous vehicles. Today’s robots are only learning to walk, answer questions, hold a beverage bottle, open a fridge and run. Some are more natural than others at these tasks. Robots will not only replace us in many jobs. They can also be truly helpful, for example in caring for the elderly, where constant daily assistance is required. Much time will pass before robots become social beings and “persons” protected with special rights, although even today such issues are being raised. Today’s robot manufacturers already face challenges that entail choosing between good and evil. How does one program a robot to always do good and never harm people? To help us under all circumstances and never stand in the way? If we are to trust technology and artificial intelligence, we must make sure that machines follow a plan. What does that mean in the case of a robot? Imagine we program one to dispense medications to a patient at specific times. Then imagine the patient refuses to take them. What is a robot to do? Respect the patient’s choice? Who will take responsibility and bear the consequences of the machine’s choice under such circumstances?

Isaac Asimov’s three laws of robotics

A time of new morality?

I have outlined above some of the most critical fields of artificial intelligence development in which ethical issues have taken, or are going to take, center stage. However, a slew of other questions exist that I was unable to ask due to space constraints. Current technological breakthroughs bring with them not only unquestionable benefits that make our lives more comfortable and better. They also pose a huge challenge to our value system. Along with hopes, they bring anxieties over a shakeup of the long-solidified lines between good and evil. Perhaps the revolution unfolding in our time will lead to a global change in our traditional view of ethics.

Related articles:

– A machine will not hug you … but it may listen and offer advice

– Can machines tell right from wrong?

– Machine Learning. Computers coming of age

– What a machine will think when it looks us in the eye?

– Fall of the hierarchy. Who really rules in your company?

– The brain – the device that becomes obsolete

– Modern technologies, old fears: will robots take our jobs?

Oniwaban

AI is nothing like what people make it out to be. It doesn’t have self-awareness, nor can it outgrow a human. To this day, no program has ever been demonstrated that can grow and develop on its own. AI is simply a pattern, or a set of human-made instructions that tell the computer how to gather and parse data. GPT-3 (OpenAI) works much like a Google search engine. It takes a phrase from one person, performs a search on billions of website articles and books to find a matching dialog, then adjusts everything to make it fit grammatically. So in reality this is just like performing a search on a search, on a search, on a search, and so on….

TomCat

That DARPA funding could theoretically seed the rescue-robot industry, or it could kickstart the killer robot one. For Gubrud and others, it’s all happening much too fast: the technology for killer robots, he warns, could outrun our ability to understand and agree on how best to use it. Are we going to have robot soldiers running around in future wars, or not? Are we going to have a robot arms race which isn’t just going to be these humanoids, but robotic missiles and drones fighting each other and robotic submarines hunting other submarines?

TomK

Asking people “what they fear most” is in itself probably a good example of a fear-focused AI perspective. In my view, a focus on practical problem solving and pragmatic regulation is what we need most.

Norbert Biedrzycki

CabbH

Robots will also take over the more repetitive tasks in professions such as law, with paralegals and legal assistants facing a 94% probability of having their jobs computerized. According to a recent report by Deloitte, more than 100,000 jobs in the legal sector have a high chance of being automated in the next 20 years.

Norbert Biedrzycki

Only a few occupations are fully automatable, but 60% of all occupations have at least 30% technically automatable activities. Automation will change far more occupations, by partially automating them, than it will replace.

Adam Spikey

What’s better than the money saved is that we can save people from a boring and mechanical part of their jobs

Norbert Biedrzycki

Technical automation potential of current jobs, according to McKinsey & Company: Japan 55%, India 52%, China 51%, US 46%.

Tom299

Do you have data on other countries?

Don Fisher

I had a home lawn mowing robot around 2000 or 2001. Different from a commercial system. E.g., at iRobot we had a commercial cleaner first, and the key was to get it to replace three different manually pushed machines for three different steps in cleaning a store; sweeping, wet cleaning, and polishing. Our fully automated machine used a 32 bit processor back in the 1990’s. The automatic version never got adopted but we did license the three-in-one machine to become a manually operated machine needing one third of the labor and they were commercially introduced. The Roomba for the home was an entirely different machine (8 bit processor at launch in 2002), and a very different cleaning regime (carpets were the principal target, not mopping and polishing tiled floors). I think the home lawnmowers are likewise very different in scope and capability than is needed for grounds keeping.

TomaszK1

Great insight. I think chat bots will be a major industry one day, but you pointed out exactly why they aren’t yet. They’re not doing anything that’s “better, faster, or cheaper” yet.

The true usefulness of robots will come when they can solve complex, multi-step user queries, faster than any app can. Right now most robots can only do basic one or two step tasks that can be done faster by an app.

Once developers start thinking up things that a robot could do much more efficiently than any other app or process currently available THEN they will receive mass adoption. But for now, we’ll have to wait.

TomK

God, it’s great that there are people here with the cognitive chops to have a productive conversation on this topic.

TonyHor

If we want to use legislation to regulate robotic technology, we’re going to need to establish better definitions than what we’re operating with today. Even the word ‘robot’ doesn’t have a good universal definition right now.

TommyG

Not yet my friend, not yet

TomCat

At some point in time, an AI will become intelligent. And no longer be a machine only.

TomK

Let’s say we get robots to the point where they can do everything. Make houses, make the internet work, mine resources so everyone gets an equal amount. No corruption, no anything, just robots doing literally everything we see as valuable. The robots also aren’t evil, they just are. Their goal is to make humans happy; that’s their sole objective. As such, they build things and turn everything into something cool.

CaffD

Seems pretty straightforward to believe that there are things we haven’t discovered yet. As for her metaphysical claims, she is technically correct that we can’t assume that empiricism is correct however it is worth noting that anything that is not empirically measurable will be unknowable. Thus anything you care to consider about an unknowable and possibly non-existent “something” is going to be a guess that cannot be confirmed.

johnbuzz3

You can have the best of all worlds in the future if you want: a synthetic body with a high-density DNA brain housed within it, and a mind constantly synced to the cloud in the event of an unforeseen accident. The future is so bright.

John McLean

Very interesting read

Karel Doomm2

While there are concerns about machines replacing people in the workforce, the benefits are tempting. Imagine how a machine that doesn’t need sleep or food, and doesn’t have the prejudices that we humans so often have, could change the way we treat people who are sick and vulnerable. With some preparation and forethought, we can make sure the human touch stays relevant in the majority of fields.

Don Fisher

Once upon a time on Tralfamadore there were creatures who weren’t anything like machines. They weren’t dependable. They weren’t efficient. They weren’t predictable. They weren’t durable. And these poor creatures were obsessed by the idea that everything that existed had to have a purpose, and that some purposes were higher than others.

These creatures spent most of their time trying to find out what their purpose was. And every time they found out what seemed to be a purpose of themselves, the purpose seemed so low that the creatures were filled with disgust and shame.

And, rather than serve such a low purpose, the creatures would make a machine to serve it. This left the creatures free to serve higher purposes. But whenever they found a higher purpose, the purpose still wasn’t high enough.

So machines were made to serve higher purposes, too. And the machines did everything so expertly that they were finally given the job of finding out what the higher purpose of the creatures could be.

The machines reported in all honesty that the creatures couldn’t really be said to have any purpose at all. The creatures thereupon began slaying each other, because they hated purposeless things above all else. And they discovered that they weren’t even very good at slaying.

So they turned that job over to the machines, too. And the machines finished up the job in less time than it takes to say, “Tralfamadore.”

Kurt Vonnegut – “The Sirens of Titan”

John McLean

Healthcare, education, and living expenses continue to rise, despite automation.

This won’t lower costs for everything. It will lower costs for some things, like sneakers with lights in the heel.

Adam T

Musk, Wozniak and Hawking urge ban on AI and autonomous weapons: Over 1,000 high-profile artificial intelligence experts and leading researchers have signed an open letter warning of a “military artificial intelligence arms race” and calling for a ban on “offensive autonomous weapons”.

TonyHor

AI and computer science, particularly machine learning, are increasingly able to help out research in the social sciences. Fields that are benefiting include political science, economics, psychology, anthropology and business / marketing. Understanding human behaviour may be the greatest benefit of artificial intelligence if it helps us find ways to reduce conflict and live sustainably. However, knowing full well what an individual person is likely to do in a particular situation is obviously a very, very great power. Bad applications of this power include the deliberate addiction of customers to a product or service, skewing vote outcomes through disenfranchising some classes of voters by convincing them their votes don’t matter, and even just old-fashioned stalking. It’s pretty easy to guess when someone will be somewhere these days.

John Accural

I think if the car is hypothetically 100% capable of following EVERY rule when driving, and it will statistically NEVER be at fault in an accident where it’s functioning correctly, then it should absolutely prioritize its driver’s safety over the pedestrians. Would it suck to see “5 pedestrians killed in self-driving car accident”? Absolutely… but if those 5 people riskily ran out into the road when they shouldn’t have, and they accepted the risk in doing that, it’s absolutely wrong for the car to kill the driver to attempt to save them.

The way I heard it put by an auto maker that sort of makes the most sense from a realistic point of view is “if you can 100% GUARANTEE that you can save a life, let it be the driver.” i.e. in the car vs pedestrians scenario, it would err on the side of the driver, hitting the pedestrians.

JohnE3

If robots might take over the world, or machines might learn to predict our every move or purchase, or governments might try to put the blame on robots for their own unethical policy decisions, then why would anyone work on advancing AI?

Our society faces many hard problems, like finding ways to work together yet maintain our diversity, avoiding and ending wars, and learning to live truly sustainably (where our children consume no more space and time than our parents, and no more other resources than can be replaced in a lifetime) while still protecting human rights. These problems are so hard, they might actually be impossible to solve. But building and using AI is one way we might figure out some answers. If we have tools to help us think, they might make us smarter. And if we have tools that help us understand how we think, that might help us find ways to be happier.

TommyG

Interesting point for the discussion. Thank you.

DDonovan

Good point! I am going to repost what ponieslovekittens wrote regarding this topic, though, which actually goes against your point and is worth taking into account. ponieslovekittens: Self-developing systems grow by interacting with their environment and adjusting their behavior in response to it. When we interact with them, we become part of the collective system that determines their behavior. When I first saw the ‘kick the robot dog’ video, my initial thought was not to feel bad for the dog. It was awareness of the fact that the whole point of kicking the dog is that it learns to remain upright by being subjected to physical forces and responding to them. Hitting the guy back would be a viable response that would contribute to the robot’s continuing to remain upright. It should come as no surprise that when we act upon intelligent systems, they might choose to act back upon us. At some point, an artificial intelligence is…intelligent. It is no longer a machine. I advise all of humanity that when these machines do cease to be machines and do become self-aware…it is in humanity’s best interest that we have already established a track record of treating them honorably. Leave robots to the Japanese. It would be better for all of us if it’s the cuddle-bots and elder care bots and sexbots that become self-aware, rather than DARPA war machines.

Simon GEE

Very nice point of view. I fully support your statement.

Mac McFisher

I don’t think post-scarcity will completely eliminate exchanges of value. Particularly when you’re talking about giant logistical concerns of the entire nation. (Like building starships to link your interstellar civilization.) I don’t think they’re mutually exclusive.

Jack666

Not only to the Japanese. China’s Alibaba is pumping 15 bln USD into AI-related R&D. The EU and the US might be close behind.

TomCat

Right

Adam Spikey

I think this vision is almost a century old, going back to von Neumann and his self-replicating machines. The big difference is that nobody doubts it is going to happen now.

CaffD

We are surrounded by hysteria about the future of Artificial Intelligence and Robotics. There is hysteria about how powerful they will become and how quickly, and there is hysteria about what they will do to jobs.

http://rodneybrooks.com/the-seven-deadly-sins-of-predicting-the-future-of-ai/

TomCat

Life is defined by the ability to reproduce, which robots (for now) cannot. By reproduce I include cells, if you want to talk about sterile people.

I think you mean sentience or self awareness or some such.

I am firmly of the belief that evolution designs the best systems, the ones that are most efficient and cost the least energy. So I think the only way a robot could be intelligent would be if it had a brain. Metallic molecules cannot do the things that molecules of carbon, hydrogen, etc. do in neurons. So to become living, the robot would have to use such carbon-hydrogen systems, hybridizing at least some biological components. At that point, it would be alive.

TommyG

Very vital point. 100% support

Mac McFisher

In a post-scarcity economy, sure, that’s trivial. But the hard part is the transition, there’s not a clear way to get from here to there without a lot of misery in between.

John McLean

I was wondering this after reading an article about technological innovations in the future. Does the Bible/Christianity account for this type of question? Do you have to be married to the robot first or..?

TomK

Can software violate a magic sky fairy law? If the developer allows it.

Adam Spark Two

Our weakness is that we assume technology is neutral, but it was obviously made for wars.

Marcuse totally saw this coming

Check Batin

The implications of AI are still being worked out as technology advances at a dizzying speed. Christians, like everyone else, or other religions, are asking questions about what this means. But one thing people of faith want to affirm most strongly is that technology has to serve the good of humanity – all of it, not just the privileged few. Intelligence – whether artificial or not – which is divorced from a vision of the flourishing of all humankind is contrary to God’s vision for humanity. We have the opportunity to create machines that can learn to do things without us, but we also have the opportunity to shape that learning in a way that blesses the world rather than harms it.

Jacek B2

What if God left us? And we create our reality?

DDonovan

No doubt, that’s why it is only the monkey part of my brain that feels bad. The frontal lobe of the cortex is what determines intent and, in this case, that has the constructive goal of testing Spot’s ability to perform as a military/disaster rescue bot.